Programming Languages Mentoring Workshop (PLMW)

Good morning from POPL 2015 in Mumbai, India.

Throughout this blog, * denotes a notation-heavy segment in the talk. These can be difficult to summarise quickly without typesetting. See the authors' papers online for now and I'll try to find speaker slides at a later time.

PLMW Organizers - Intro

This is the 4th PLMW workshop and we are very pleased to see you all. Mentoring workshops are now becoming much more common. This year we have funded 75 students. We would like to thank the speakers, sponsors and organisers (including Annabel :))

Video from Derek

POPL proceedings are now available online

Nate Foster (Cornell University) - You and Your Graduate Research

Prelude:

- doing a PhD is not easy, many do not finish. This is a survivor's story

- some of my comments may be US-specific

- acknowledgements (including Jorge Cham from PhD comics :))

Motivation

This is useful for everyone, not just undergraduates.

Why you shouldn't do it?

- money: Nate shows a graph of salaries at universities; football coaching is clearly the right way to go for a big salary.

- start-ups: Tech start-ups are now a hot topic (the Oculus VR example), and you don't need a PhD for this.

- respect: Education is highly valued and there's respect for degrees, but no one will call you doctor except your mom.

- to stay in school: Undergraduate was fun so let's stay in school; a PhD program is totally different.

- being a professor: Let's all become professors; a demonstration of the number of academics versus other careers.

Why you should?

- opens opportunities

- cool jobs in industry: leadership, work on cool problems, you can work on large systems and with real users

- freedom: applies on any level, freedom to choose interesting problems, opportunities to work on the big problems

What is a PhD?

Comparing degrees at different levels:

- high school: basics for life

- undergrad: broad knowledge in a field

- professional: advanced knowledge and practical skills

- PhD: advanced knowledge and a research contribution.

A PhD is open-ended.

A PhD is a transformation: we start with intelligent people who aren't researchers. It's an apprenticeship-based route to becoming a researcher. It's not an easy or painless process.

PhD Success

Pick an institution

The community that you're in will shape your work.

Very important factors include advisor, opportunities and peers. Typically less important factors are finances, institution and location.

Dive into Research

Don't be distracted by other things e.g. classes and teaching

Pick great problems

"It is better to do the right problem the wrong way than the wrong problem the right way" - Hamming

Stay Engaged

Working with others makes it easier, as you can motivate each other.

Know when it's time to switch topics - good researchers are versatile and able to switch quickly

Independence - you need to know when the time is right to go rogue and ignore your advisor

Reaching escape velocity to graduate

Peter Müller (ETH Zürich) - Building automatic program verifiers

verification: Given a program P and a specification S, prove that all executions of P satisfy S

automatic verification: Develop an algorithm that decides whether P satisfies S

We can't prove halting => we are finished :)

Let's start again ...

Peter demonstrates a verification tool called Viper, using the typical account balance and transfer example. The verifier gives two errors; we add preconditions and the verifier succeeds. We then use the verifier for thread safety, again fixing a concurrency bug with locking.

Automatic verification is not black and white. We can have semi-automatic verification, where we guide the verifier. The complexity of the code varies greatly; most success in the area comes from focusing on a small area. We build verifiers from weaker guarantees. Verification can work with modularity (or maybe it will not). The complexity of the properties also varies.

7-stage process

Define the research goals

What is the state of the art?

Find examples in the area of the problem space that are not currently handled (well) by current verifiers.

Find Reasoning Principle

How to explain the correctness of the code to a friend? For the loop, we would use a loop invariant.

This is very different to techniques like model checking: I wouldn't say to a friend "I have checked 15 billion possible evaluation paths and they are all valid".
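The loop-invariant style of argument can be sketched in OCaml. This is only an illustration: the invariant is checked at runtime with `assert`, whereas a verifier would prove it once, statically. The names `prefix_sum` and `sum` are made up for the example and do not come from the talk.

```ocaml
(* Reference meaning of "sum of the first k elements of a". *)
let prefix_sum (a : int array) (k : int) : int =
  let s = ref 0 in
  for i = 0 to k - 1 do
    s := !s + a.(i)
  done;
  !s

(* Sum an array, checking the loop invariant on every iteration. *)
let sum (a : int array) : int =
  let acc = ref 0 in
  for i = 0 to Array.length a - 1 do
    (* Invariant: before iteration i, acc is the sum of a.(0) .. a.(i-1). *)
    assert (!acc = prefix_sum a i);
    acc := !acc + a.(i)
  done;
  (* Postcondition: the invariant at loop exit, with i = length a. *)
  assert (!acc = prefix_sum a (Array.length a));
  !acc
```

The explanation to a friend is the comment on the invariant: it holds before the loop, each iteration preserves it, and at exit it gives the postcondition.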

Break Down Arguments

Decompose the correctness argument: what do I need to prove for each program and what can I reuse between programs? How can I modularise the program?

Design Specification Language

Designing a language to express intended behaviour, we must know who the user will be and their level of experience. Find the right compromise between expressibility and simplicity, that is right for the intended audience.

Design Program Logic

Determine which properties need to be checked and which properties may be assumed.

Automate Proof Search

Utilize existing infrastructure like SAT and SMT solvers. Develop decision procedures for aspects not already covered by existing infrastructure.

Evaluate the Solution

Firstly an experimental evaluation: does the solution work on the example code you were examining? Then the meta-theory, like the soundness proof.

Research Direction

Either develop new techniques for new languages and features (e.g. the recent interest in event-based programming due to Android) or work on general infrastructure that can be utilised in many areas.

Frank Pfenning (Carnegie Mellon University) - Proof theory and its role in programming language research

I will show you why every PL researcher needs a bit of proof theory.

How do we write correct programs?

We don't :)

In practice, programming and informal reasoning go hand in hand: we use mental logical assertions, e.g. after calling sort the list is sorted. It's vital to decompose the problem into parts so we can reason locally.

We need to develop programming languages with the logic of the programmer in mind. Think about your least favourite programming language and why the operational or logical model of the language doesn't agree with you. Reasoning is an integral part of programming. We need to co-design the language and the logic for reasoning about it.

Logic is computation, so the key is to design coherent logical and operational semantics.

Examples:

Computation first...

- runtime code generation => IS4

- partial evaluation => temporal logic

- dead code elimination => modal logic

- distributed computation => IS5

- message-passing concurrency => linear logic

- generic effects => lax logic

... and the logics first

- lax logic => ??

- temporal logic => ??

- epistemic logic => ??

- ordered logic => ??

Key ingredients.

Understand the difference between judgements and propositions. Know the basic styles of proof systems, e.g. natural deduction, sequent calculus, axiomatic proof systems and binary entailment.

Example: Hypothetical Judgement*

Example: Runtime Code Generation

We have the source expression at runtime. We distinguish ordinary variables, which are bound to values, and expression variables, which are bound to source code.*

Stephanie Weirich (University of Pennsylvania) - How to write a good research paper

Stephanie is giving the popular talk "How to write a good research paper" by Simon Peyton Jones.

Start by writing the paper. It focuses us. Write a paper about any idea no matter how insignificant it seems. Then you develop the idea.

Identify your key idea: the paper's main goal is to convey your idea. Be explicit about the main idea of the paper; the reviewers should not need to be detectives.

The paper flow:

- Here is a problem

- It's an interesting problem

- It's an unsolved problem

- Here is my idea

- My idea works

The introduction - describe the problem and what your paper contributes towards the problem. Don't describe the problem too broadly too quickly. It's vital to nail the exact contributions.

The related work - it belongs after the main body of the paper, not straight after the introduction. Be nice in the related work. Be honest about the weaknesses of your approach.

Use simple, direct language, putting the reader first. Listen to readers.

Damien Pous (CNRS, LIP, ENS Lyon) - Coinductive techniques, from automata to coalgebra

Checking language equivalence of finite automata

Demonstration of the naive algorithm to check equivalence by walking through the states and comparing. This algorithm is looking for a bisimulation. This algorithm has quadratic complexity. Instead we can use the HK (Hopcroft and Karp) algorithm.
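A minimal sketch of the naive check for deterministic automata, written in OCaml. The `dfa` record, the `equivalent` function and the example automata are assumptions made for illustration; they are not taken from the talk.

```ocaml
(* A DFA over characters with integer states. *)
type dfa = {
  start : int;
  accepting : int -> bool;
  step : int -> char -> int; (* total transition function *)
}

module PairSet = Set.Make (struct
  type t = int * int
  let compare = compare
end)

(* Explore the reachable pairs of states, failing as soon as one state
   of a pair accepts and the other does not. If the search finishes,
   the visited set is a bisimulation relating the two start states. *)
let equivalent (alphabet : char list) (a : dfa) (b : dfa) : bool =
  let rec loop visited todo =
    match todo with
    | [] -> true
    | (x, y) :: rest ->
      if PairSet.mem (x, y) visited then loop visited rest
      else if a.accepting x <> b.accepting y then false
      else
        let next = List.map (fun c -> (a.step x c, b.step y c)) alphabet in
        loop (PairSet.add (x, y) visited) (next @ rest)
  in
  loop PairSet.empty [ (a.start, b.start) ]

(* Two automata for "even number of 'a's" and one for "odd number". *)
let even2 =
  { start = 0;
    accepting = (fun s -> s = 0);
    step = (fun s c -> if c = 'a' then 1 - s else s) }

let even4 =
  { start = 0;
    accepting = (fun s -> s mod 2 = 0);
    step = (fun s c -> if c = 'a' then (s + 1) mod 4 else s) }

let odd2 = { even2 with accepting = (fun s -> s = 1) }
```

Here `equivalent ['a'; 'b'] even2 even4` holds and `equivalent ['a'; 'b'] even2 odd2` does not. The Hopcroft and Karp algorithm improves on this by keeping the visited pairs in a union-find structure, so that pairs already related by the equivalence closure are skipped.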

[I got a bit lost in the theory of coalgebras for the rest of the talk, sorry]*

OCaml 2014

Welcome

Many submissions this year so a few had to be squeezed into "short talk" slots.

Session 1: Runtime system

Multicore OCaml, by Stephen Dolan (presenting), Leo White, Anil Madhavapeddy (University of Cambridge)

Still work in progress. Concurrency v. parallelism. Concurrency is for writing programs (e.g. handle 10000 connections at once). Parallelism is for performance (e.g. making use of 8 cores).

LWT and Async give good support for concurrency (monads are a bit awkward).

Direct-style threading library: vmthreads and systhreads are not very efficient.

Parallelism: sad story. Can use multiple processes with manual copying ...

Unifying the two? -- concurrent programs are easily parallelized: should we use the same primitives? Java, C# and others do but it's a bad idea. At scale, death by context-switching.

Fibers for concurrency (cheap! -- have millions) and Domains for parallelism (expensive! -- have ~#core ones)

Concurrency primitives that are proposed are non-monadic. MVar: a blocking variable. Can use MVars to define an "async" function.

Demo using a Fibonacci function: One recursive call is done using "async". Parallelize it to 48 cores -- get diminishing returns as expected, but the speedup is impressive.
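The shape of the demo can be approximated with the `Domain` module that later shipped in OCaml 5. This is an assumption: the talk used the prototype's fibers and "async", not this API, and the `depth` cut-off is added here because domains are expensive to create.

```ocaml
(* Requires OCaml 5 or later for the Domain module. *)
let rec fib n = if n < 2 then n else fib (n - 1) + fib (n - 2)

(* Run one recursive call in a new domain for the top [depth] levels
   of the recursion; below that, use the sequential version. *)
let rec par_fib depth n =
  if depth = 0 || n < 2 then fib n
  else
    let left = Domain.spawn (fun () -> par_fib (depth - 1) (n - 1)) in
    let right = par_fib (depth - 1) (n - 2) in
    Domain.join left + right
```

With `depth = 2` this spawns three extra domains, which keeps the number of domains close to the number of cores rather than one per recursive call.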

Q: Do you keep spawning even when no domains are free?

A: Yes. Spawning creates a new fiber which is extremely cheap (minor allocation of 30 words).

Q: Can you manipulate domains from within the program?

A: Yes.

Multiple domains run in parallel; fibers are balanced between domains. Creating domains is heavyweight.

OCaml is very fast for immutable data and functional programs -- try to keep this unchanged. Mutability is more complicated in a multithreaded system! Use a descendant of Doligez-Leroy.

Each domain has a private minor heap; no pointers exist between minor heaps. The major heap is shared. The major heap can point into minor heaps. Can do completely independent minor collections. Shared heap: mostly-concurrent, inspired by VCGC.

C API -- some minor changes required (sed can fix most!), e.g. caml_modify(&Field(x,i), y) becomes caml_modify_field(x, i, y). Atomicity guarantees of current GC are preserved.

Status: bootstraps, but GC needs more testing, tuning and benchmarking. Bytecode only at the moment. Weak references, finalizers etc. are still missing.

Q: Are you exposing a raw memory model?

A: We'll probably settle on enforcing an ordering of stores by using a memory fence on platforms which don't support this natively.

Q: Your collector stops all of the mutator sets. Is there any way of doing collection in parallel with mutators?

A: Have collection in parallel, then stop the world to verify (?)

Q: If fiber does a blocking system call, does that block the entire domain?

A: Yes at the moment, but there are plans to change that.

Q: Can what you are doing be used in monadic libraries in LWT or Async?

A: Yes, but that would probably not be a good idea because users of these libraries don't assume that their code is running concurrently.

Q: Is it possible to make sure that a particular fiber is in the same or is not in the same domain as other fibers?

A: Priorities, affinities to domains etc. are very interesting and we are just starting to provide these.

Ephemerons meet OCaml GC, by François Bobot (CEA)

Memoization -- computing a function with a cache while avoiding memory leaks: if a key is not needed anymore, we want to remove the entry from the cache. Particular case: heap-allocated keys not needed anymore means not reachable (apart from the cache).

Naive solution: use a traditional dictionary data structure (hash table, balanced tree etc.) -- problem: no key-value combination is ever released until the function is released!

Weak pointers -- a value not yet reclaimed can be accessed via a weak pointer. GC can release a value that is only pointed to by weak pointers.

Finalizers: can attach one or several to a value. So next idea: don't use K directly to index the table. But what if K and V are the same? Gives circular dependency, and nothing ever gets released.

Next idea: keep table in key! (Haskell: can do something like that with System.Mem.Weak) But we can still get cycles.

Ephemerons (Hayes 1997): value v can be reclaimed if its key k or the ephemeron can be reclaimed.

Implementation: OCaml runtime modified (Github pull request 22). Adds a new phase, cleaning, between mark and sweep.

Can use ephemerons to implement weak tables.
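A small memoization sketch using the `Ephemeron.K1` functor from the standard library of later OCaml releases. This is an assumption: the talk described a runtime patch, and the `Cache`, `expensive` and `memo` names are made up for the example.

```ocaml
(* A weak table keyed by strings, compared by physical identity so
   that each heap-allocated key has its own entry. *)
module Cache = Ephemeron.K1.Make (struct
  type t = string
  let equal = ( == )
  let hash = Hashtbl.hash
end)

(* Counts how often the underlying function really runs. *)
let calls = ref 0

let expensive (s : string) : int =
  incr calls;
  String.length s

let cache : int Cache.t = Cache.create 16

(* An entry can be reclaimed by the GC once its key is unreachable
   from everywhere except the cache. *)
let memo (s : string) : int =
  match Cache.find cache s with
  | v -> v
  | exception Not_found ->
    let v = expensive s in
    Cache.add cache s v;
    v
```

As long as the caller keeps a key alive, a second call to `memo` with that key hits the cache; once the key is dropped, the entry no longer keeps the key or the value alive.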

Session 2: Tools and libraries

Introduction to 0install, by Thomas Leonard (University of Cambridge)

Converted 0install from Python to OCaml. Will give intro to 0install -- decentralized, cross-platform package manager -- and report his experience porting Python code to OCaml code.

0install (www.0install.net) created in 2003. Make one package that works everywhere. Packages can come directly from upstream (or from distribution); switch to latest version easily.

Example: 0install add opam http://tools.ocaml.org/opam.xml

Q: How does it deal with libraries?

A: Libraries are shared.

Porting to OCaml.

Python problems -- too slow (mobile platforms), too unreliable -- no static type checking, Python 3 trouble, PyGTK breakage...

Idea to port to a different language -- lots of languages compared (for 0install, only startup time matters)

OCaml: Bad -- top-level no readline support, syntax errors hard to find, unhelpful errors; Good -- fast!, stable, reliable.

Suggestion: OCaml for Python, Java, ... users on the website.

Porting process: used old Python and new OCaml parts together; communication via JSON. Literal translation first; refactor later in OCaml.

Results: similar LOC, 10x faster, reliable, great community!

Q: Can 0install deal with multiple versions of the same package?

A: For OCaml packages, use OPAM.

Q: How often did you run into type errors when porting to OCaml?

A: Porting the SAT solver was a little tricky. None and null are handled better in the OCaml code and all kinds of network errors are handled now.

Q: Are you still using objects?

A: Currently getting rid of objects and making things more immutable.

Transport Layer Security purely in OCaml (*), by Hannes Mehnert (presenting, University of Cambridge), David Kaloper Meršinjak (University of Nottingham)

Current state: Mirage OS -- memory safe, abstract, modular. But for security it calls unsafe, insecure C code??

Motivation: protocol logic encapsulated in declarative functional core; side effects isolated in frontends; concise, useful, well-designed API (should be easy in comparison to OpenSSL!)

TLS: secure channel between two nodes. Most widely used security protocol family. Flexible algorithm: negotiation of key exchange, cipher and hash.

Detailed properties: authentication, secrecy, integrity, confidentiality, forward secrecy. Divided into protocol layers: handshake, change cipher spec, alert, application data, heartbeat subprotocol.

Authentication (X.509): client has a set of trust anchors (CA certificates); server has a certificate signed by a CA; during the handshake the client receives the server certificate chain; client verifies that the server certificate is signed by a trust anchor.

Handshake demo using the OCaml TLS implementation.

Stats: 10kloc (compare OpenSSL 350kloc); interoperable. Missing features: client authentication, session resumption, ECC ciphersuites. Roughly 5x slower than OpenSSL. Took ~3 months to implement.

Future: implement missing features, improve performance, test suites, integration with Mirage.

More at http://openmirage.org/blog/introducing-ocaml-tls

Q: Who will use this?

A: The University of Cambridge.

C: The team that discovered the Heartbleed bug ran their test framework against this library, and found five bugs in Linux but none in this library.

Q: What about timing and interaction with GC?

A: Best practice is not to use any data-dependent branches and to use the same memory access patterns. No data-dependent allocations. But the preferred way is to do this in the language.

Q: How about tests?

A: We have a test suite but we'd like to automatically generate tests and run them against other implementations to be able to do comparisons.

OCamlOScope: a New OCaml API Search (*), by Jun Furuse (Standard Chartered Bank, Singapore)

Haskell has type classes, purity, laziness where OCaml is different. But what's really different is library search: Hoogle. Can search by name, by type, or both.

OCamlBrowser and OCaml API Search are very limited, so Jun built OCamlOScope.

OCamlBrowser works only for locally compiled code, uses OCaml typing code. OCaml API search was based on OCamlBrowser but has been discontinued.

Previously there were many problems with trying to build an OCaml API search. Now cmt/cmti files give compiled AST with locations, and OPAM gives unified installations; compiler-libs make it easier to access OCaml internals.

OCamlOScope: remote (hosted); search by edit distance.
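Search by edit distance can be sketched with the classic Levenshtein distance. This is only an illustration of the idea; it is an assumption that the tool uses something along these lines, and `edit_distance` and `closest` are hypothetical names.

```ocaml
(* Levenshtein distance between two strings, keeping one row of the
   dynamic-programming table at a time. *)
let edit_distance (s : string) (t : string) : int =
  let m = String.length s and n = String.length t in
  (* prev.(j): distance between the first i-1 chars of s and the
     first j chars of t, at the start of iteration i. *)
  let prev = Array.init (n + 1) (fun j -> j) in
  let curr = Array.make (n + 1) 0 in
  for i = 1 to m do
    curr.(0) <- i;
    for j = 1 to n do
      let cost = if s.[i - 1] = t.[j - 1] then 0 else 1 in
      curr.(j) <-
        min (min (prev.(j) + 1) (curr.(j - 1) + 1)) (prev.(j - 1) + cost)
    done;
    Array.blit curr 0 prev 0 (n + 1)
  done;
  prev.(n)

(* Rank candidate names by their distance to the query. *)
let closest (query : string) (names : string list) : string list =
  List.sort
    (fun a b -> compare (edit_distance query a) (edit_distance query b))
    names
```

A misspelt query such as `"List.mapp"` then ranks `"List.map"` ahead of `"List.mem"` and `"Array.map"`.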

525k entries (values, types, constructors) -- 115 OPAM packages, 185 OCamlFind packages. Note: need to deal with two different package systems! Scraping cmt/cmti with OPAM, create module hierarchy with OCamlFind; must detect OPAM - OCamlFind package relationships.

Future work: real alias analysis (instead of ad-hoc grouping).

Find it at https://ocamloscope.herokuapp.com

Q: Do you plan to integrate this with an editor?

A: That would be nice, sure.

C: Could use something like your edit distance approach to improve error messages and have the compiler give suggestions.

Session 3: OCaml News

The State of OCaml (invited), Xavier Leroy (INRIA Paris-Rocquencourt).

Today: What's new in OCaml 4.02 (September 2014)? Many new features; 66 bugs fixed, 18 feature wishes.

1. Unified matching on values and exceptions

Classic programming problem: in let x = a in b, we want to catch exceptions raised by a but not those raised by b. In OCaml 4.02, the match .. with .. construct is extended to analyse both the value of a and exceptions raised during a's evaluation.

    let rec readfile ic accu =
      match input_line ic with
      | "" -> readfile ic accu
      | l -> readfile ic (l :: accu)
      | exception End_of_file -> List.rev accu

Compilation preserves tail-call optimization.
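For comparison, here is a small sketch of the same lookup written in the older style and with the 4.02 construct. The function names and the `Hashtbl` example are chosen for illustration only.

```ocaml
(* Before 4.02: wrap the lookup in an option so that only exceptions
   raised by the lookup itself are caught. *)
let describe_old (tbl : (string, int) Hashtbl.t) (key : string) : string =
  match (try Some (Hashtbl.find tbl key) with Not_found -> None) with
  | Some n -> Printf.sprintf "%s = %d" key n
  | None -> key ^ " is unbound"

(* With 4.02: value cases and exception cases sit in one match. An
   exception raised while building the result is not caught here. *)
let describe (tbl : (string, int) Hashtbl.t) (key : string) : string =
  match Hashtbl.find tbl key with
  | n -> Printf.sprintf "%s = %d" key n
  | exception Not_found -> key ^ " is unbound"
```

Both versions behave the same; the second avoids the intermediate option value.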

2. Module aliases

Common practice: bind an existing module to another name.

module A = struct module M = AnotherModule ... end

This binding is traditionally treated like any other definition. This can cause subtle type errors with applicative functors; accesses sometimes go through more indirections; linking problems.

In OCaml 4.02, these constructs are treated specially during type-checking, compilation and linking.
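A minimal example of the pattern, with the standard `String` module standing in for `AnotherModule`; the names `A`, `shout` and `greeting` are made up for the example.

```ocaml
module A = struct
  (* An alias: M is just another name for the standard String module. *)
  module M = String

  let shout s = M.uppercase_ascii s
end

(* Because M is an alias, A.M.t and String.t are the same type. *)
let greeting : String.t = A.M.concat " " [ A.shout "hello"; "world" ]
```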

Discussion of the application of this to libraries.

3. Mutability of strings

For historical reasons, the string type is mutable in place and has two broad uses. It can be used to represent text, or as an I/O buffer (mutable). OCaml programmers usually keep these two uses distinct. But mistakes happen, and user code may be malicious.

In the Lafosec study (ANSSI), there are a few examples of OCaml code where one can mutate someone else's string literals, or make a Hashtable key disappear.

New solution by Damien Doligez: in 4.02, there are two base types -- string for text, and bytes for byte arrays. By default, the two are synonymous but incompatible if option -safe-string is given.

In default mode, all existing code compiles but gets warnings when using String functions that mutate in place.

In -safe-string mode, the values of type string are immutable (unless unsafe coercions are used); I/O code and imperative constructions of strings need to be rewritten to use type bytes and library functions from Bytes.
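A short sketch of the rewritten style: mutation happens on a `bytes` copy, and the result is converted back to an immutable `string`. The `rot13` function is just an example and is not from the talk.

```ocaml
(* Transform a string imperatively in a Bytes buffer, then freeze it. *)
let rot13 (s : string) : string =
  let b = Bytes.of_string s in (* fresh mutable copy of s *)
  for i = 0 to Bytes.length b - 1 do
    let c = Bytes.get b i in
    let rotate base = Char.chr (base + ((Char.code c - base + 13) mod 26)) in
    match c with
    | 'a' .. 'z' -> Bytes.set b i (rotate (Char.code 'a'))
    | 'A' .. 'Z' -> Bytes.set b i (rotate (Char.code 'A'))
    | _ -> ()
  done;
  Bytes.to_string b (* immutable result; s itself is untouched *)
```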

Other novelties: explicitly generative functors; annotations over OCaml terms; external preprocessors that rewrite the abstract syntax tree (-ppx option); open datatypes, extensible a posteriori with additional constructors.
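Open datatypes look like this in practice; the `message` type and its constructors are invented for the example.

```ocaml
(* An open (extensible) variant type: no constructors yet. *)
type message = ..

(* Constructors can be added later... *)
type message += Ping | Text of string

(* ...including from other modules. *)
module Plugin = struct
  type message += Number of int
end

(* A match on an open type needs a catch-all case, since more
   constructors may be added after this function is written. *)
let to_string : message -> string = function
  | Ping -> "ping"
  | Text s -> s
  | Plugin.Number n -> string_of_int n
  | _ -> "<unknown>"
```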

Performance improvements: more aggressive constant propagation, dead code elimination, common subexpression elimination, pattern-matching, printf (based on GADTs); representation of exceptions without arguments

New toplevel directives to query the environment; new port to 64-bit ARM; source reorganization: Camlp4 and LablTk split off from the core distribution and now live as independent projects.

What's next? Bug fix release 4.02.1, then various language experiments in progress; more optimizations, ephemerons, GDB support, tweaks to support OPAM better.

Q: Safe Haskell is starting to catch on in the Haskell community.

A: "Safe strings" are a first step in this direction. Safe OCaml is definitely something we're thinking about.

The OCaml Platform v1.0, by Anil Madhavapeddy (presenting, C), Amir Chaudhry (C), Jeremie Dimino (JS), Thomas Gazagnaire (C), Louis Gesbert (OCamlPro), Thomas Leonard (C), David Sheets (C), Mark Shinwell (JS), Leo White (C), Jeremy Yallop (C); (C = University of Cambridge, JS = Jane Street)

The platform: tooling, quantitative metrics, agility to judge the impact of language changes. Ultimate goal: grow a sustainable open source community.

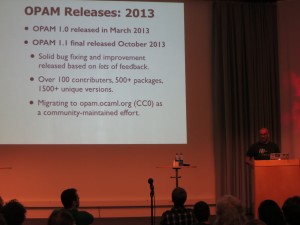

OPAM 1.2: "The Platform Release" -- solver errors are explained in plain English rather than boolean formulae, more expressive queries (reverse dependencies and recursive); can clone the source code and repo file for any OPAM package.

Steady growth in the number of released OPAM packages. Growth in number of contributors is much slower.

New workflow in OPAM 1.2 (See http://opam.ocaml.org/blog/opam-1-2-pin/) -- flexible clone, pin, configuration, sharing.

OCaml Platform? OPAM Platform! Tools built around OPAM that provide a modular workflow for developing, publishing and maintaining OCaml source code, both online and offline.

OPAM = OCaml PlAtformM!

OPAM 1.2 restructured: everything built on OpamLib. On top of that library, have "opam", "opam-publish", "opam-doc" (with opam-units) tools.

OPAM documentation -- goal: documentation unified across packages that handles cross-referencing and module inclusion well. It's hard!

Use only the Typed AST; comments are transformed into attributes in the typed AST; these are used by external tools.

Preview: http://ocaml.github.io/platform-dev a working prototype.

Timeline: Sept online release, Nov use it locally, Dec build custom website for other repositories.

Tooling: OCamlJS now supports complete compiler REPL in JS; GDB integration.

Polish: easier to package and install; binary releases on lots of platforms; documentation rewritten.

One More Thing: Assemblage -- a very alpha eDSL to describe OCaml projects. OCaml as host language with Merlin auto-completion. Can introspect the project description to generate build rules. Very lightweight, integrates easily.

Q: Do you have anything to help with ARM cross-compilation?

A: Global build "glue" still required to support cross-compilation (Assemblage will help with this)

Q: Any platform is a (false?) promise to define a set of supported libraries. What's OPAM's take on this?

A: We as the developers of the platform don't want to be the people who define what the current set of packages considered as "stable" is. We just build the tools.

Session 4: Language

A Proposal for Non-Intrusive Namespaces in OCaml, by Pierrick Couderc (I), Fabrice Le Fessant (I+O), Benjamin Canou (O), Pierre Chambart (O); (I = INRIA, O = OCamlPro)

In OCaml, we cannot use multiple modules that have the same name.

Common practice: use long names, e.g. LibA (Misc, Map) as LibA (LibA_Misc, LibA_Map).

Packs: for the developer -- no code change, simply a matter of options; user -- use path to distinguish modules. Cons: too many recompilations, dependencies, large executables!

In 4.02, we can use module aliases. Option -no-alias-deps.

Advantages: can deceive ocamldep for better dependencies and namespaces can be used transparently.

Proposed solution: namespaces and "as" imports. Can import specific modules, or all modules, or all modules except certain ones from a namespace.

Namespaces are not closed -- adding a module a posteriori is possible.

Comparison with module aliases: +extensibility, +simple build system, +better dependencies, +expressivity. But -new syntax.

Work in progress: big functors -- using packs to generate functors.

Conclusion: mechanism of namespaces integrated in the language, solves compilation issues. Working prototype for 4.02: github.com/pcouderc/ocaml_namespaces

C: Openness vs. functorization.

A: With big functors, one idea would be to close namespaces but that would not be ideal.

C: There are already module aliases and then there will be namespaces. Mixing the idea of big functors with namespaces does not seem like a good idea.

Q: How about qualified imports?

A: Interesting idea.

Q: Why do you need "in namespace" if you're already separating by directory structure?

A: It's not enough. We need to separate compilation units.

C: But directory names could be used at link time.

C: The benefits must be very clear because there already are lots of ways of importing "stuff".

Improving Type Error Messages in OCaml (*), by Arthur Charguéraud (INRIA & Université Paris Sud)

Type errors: dozens of papers, no implementation. For beginners and experts, type errors can be very frustrating.

Result: a patch to the type-checker, providing alternative error messages for ill-typed toplevel definitions.

Example: missing unit argument to read_int -- report "You probably forgot to provide '()' somewhere"; refs and bangs; missing "rec" -- report "You are probably missing the 'rec' keyword".

Example: missing else branch -- laughs from the audience about the terrible type error message.

Other common errors where the error messages were improved: subexpressions, ill-typed applications, binary operators, incompatible branches, higher-order function application, occurs check errors.

Also works for optional and named arguments.

Not included: GADTs, module type checking.

Try it online: tinyurl.com/ocamleasy

Q: Why can't we have this today??

A: Need more testing, especially with higher order functions.

Github Pull Requests for OCaml development: a field report (*), by Gabriel Scherer (INRIA).

Experiment of using Github pull requests for 8 months.

Old way: Mantis.

users report bugs, request features, propose patches

sometimes a developer works on a PR

a release each year, with some bugs fixed and some new features

Serious bugs get fixed, features are mostly ignored. Patches get ignored too!

Results of the experiment: 18 new contributions (patches) from people who probably wouldn't have sent their patches in the old system, 18 new reviews! So Github attracted a new crowd of contributors.

Some Github pull requests were handled very quickly (small fixes and changes). Developer meetings help taking decisions. Pull requests are most effective during initial development: before the feature freeze (after that the team is busy with getting the release out).

Negative results: to be effective, external users should be told more about the development cycle. Github was used mostly by people used to git.

Q: Reviews and contributions?

A: 0 reviews on Mantis, 18 on Github; ~40 patches on Mantis, 18 on Github.

Q: How about switching to git?

A: Don't believe that is going to happen. There is so much information on Mantis right now! Continuous integration is nice though.

Joint Poster Session (with ML Family workshop)

Irminsule: a branch-consistent distributed library database, by Thomas Gazagnaire (C), Amir Chaudhry (C), Anil Madhavapeddy (C), Richard Mortier (University of Nottingham), David Scott (Citrix Systems), David Sheets (C), Gregory Tsipenyuk (C), Jon Crowcroft (C); (C = University of Cambridge)

What if you had a distributed database with git-like semantics? -- E.g. commit history

Problem: merges!

Have an Obj and a Git backend. Implementations of various persistent data structures with merge function: prefix trees, mergeable queues, mergeable ropes.
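The idea of a data structure with a merge function can be sketched as a three-way merge against the common ancestor. This is not the Irminsule API; `merge_counter` and `merge_value` are hypothetical names for illustration.

```ocaml
(* Three-way merge: combine two versions given their common ancestor. *)

(* Counters merge by applying both branches' changes to the ancestor. *)
let merge_counter ~(old : int) (a : int) (b : int) : int =
  old + (a - old) + (b - old)

(* A plain value merges only if at most one branch changed it. *)
let merge_value ~(old : 'a) (a : 'a) (b : 'a) : 'a option =
  if a = b then Some a
  else if a = old then Some b
  else if b = old then Some a
  else None (* both branches changed it differently: a conflict *)
```

For example, merging counters 12 and 15 that both started from 10 gives 17, while two different edits to the same plain value are reported as a conflict.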

A Case for Multi-Switch Constraints in OPAM, by Fabrice Le Fessant (INRIA)

OPAM: the official way to install OCaml packages. Builds a CUDF universe with packages available for the switch, and the dependency constraints between these packages. Send the universe to the CUDF solver, then apply the solution to the switch.

Multi-switch constraints: add a switch prefix to each package name; allow dependency constraints between packages with different switch prefixes.

Use cases: cross-compilation (build and host switches), multi-switch packages, all-switch commands, per-switch repositories, external dependencies, application-specific switches.

No implementation yet -- just an idea for discussion.

LibreS3: design, challenges, and steps toward reusable libraries, by Edwin Török (Skylable Ltd.)

Concepts: monads, S3 server -- proprietary Amazon service; FOSS replacements exist.

Architecture: don't choose one particular library/framework; be careful with acquiring resources: with_resource; some code in LibreS3 has been generalized (any-cache, any-http)
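A direct-style sketch of the `with_resource` pattern, assuming `Fun.protect` from OCaml 4.08 or later. LibreS3 itself is monadic, so its real helper will differ; the signature below is a guess made for illustration.

```ocaml
(* Run [use] on an acquired resource and always release it afterwards,
   even if [use] raises. *)
let with_resource ~(acquire : unit -> 'r) ~(release : 'r -> unit)
    (use : 'r -> 'a) : 'a =
  let r = acquire () in
  Fun.protect ~finally:(fun () -> release r) (fun () -> use r)
```

Typical use would pair `open_in` with `close_in`, so that a channel is closed whether or not reading from it fails.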

Found some interesting bugs in OCaml and libraries while developing LibreS3. Debugging monadic code is hard! Stack traces from monadic concurrency are incomplete.

More: goo.gl/jmFOcn

Nullable Type Inference (#), by Michel Mauny and Benoit Vaugon (ENSTA-ParisTech)

Nullable type t? includes NULL and values of type t. Provide type inference algorithm featuring HM polymorphism that statically guarantees that NULL cannot be used as a regular value (algorithm's soundness has been proved).

Nullable types are used in practice: Hack (Facebook), Swift (Apple)

Applications

Coq of OCaml, by Guillaume Claret (Université Paris Diderot)

OCaml: FP with imperative features, many libraries and programs.

Coq: mainly used as a proof language, purely functional (only terminating programs!), dependent types, limited number of libraries.

Coq to OCaml: extraction mechanism developed by Pierre Letouzey -- removes proof terms, complete.

OCaml to Coq: CFML: deep embedding -- how to do shallow embedding? How to import imperative programs? How to keep the resulting code small?

Use a monadic translation to represent imperative features in Coq.

Usage: prove formal properties on OCaml programs, augment number of Coq libraries for programming.

Effects descriptor: a set of atomic effects. Inference: infer types using the OCaml compiler, then bottom-up analysis; fixpoint for mutually recursive definitions. Then represent effects as monads in Coq.

There is one monad per descriptor of effects -- how do we compose them? Impossible in general, but doable when we restrict the shape of the monads.

Monads exist to model global references, exceptions, i/o, non-termination.
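To make the monadic translation concrete, here is a state monad in OCaml modelling a single global reference. This is an illustrative sketch, not the tool's actual output (which is Coq); all names are made up for the example.

```ocaml
(* State monad: a computation takes the current value of the "global
   reference" and returns a result together with the updated value. *)
type ('s, 'a) state = 's -> 'a * 's

let return (x : 'a) : ('s, 'a) state = fun s -> (x, s)

let bind (m : ('s, 'a) state) (f : 'a -> ('s, 'b) state) : ('s, 'b) state =
 fun s ->
  let x, s' = m s in
  f x s'

let get : ('s, 's) state = fun s -> (s, s)
let put (s : 's) : ('s, unit) state = fun _ -> ((), s)

(* Imperative original: increments a global counter. *)
let counter = ref 0

let next_imperative () =
  incr counter;
  !counter

(* Pure translation: the counter is threaded through explicitly. *)
let next_pure : (int, int) state =
  bind get (fun n ->
      bind (put (n + 1)) (fun () ->
          return (n + 1)))
```

Running `next_pure` on state 0 gives `(1, 1)`, matching what the first call to `next_imperative ()` returns and leaves in the counter.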

Supported language fragment: pure lambda-calculus kernel, mutually recursive functions, inductive types, records, ADTs, ...

Compilation passes: 1. import the syntax tree typed by the OCaml compiler; 2. infer effects; 3. do monadic transformation; 4. pretty-print to Coq syntax.

Challenges: generate human-readable code, support real OCaml programs, import basic libraries, support functors (not currently implemented).

Conclusions: hard to make compiler work on real examples; functors are complex!

Q: The granularity of effects matters a lot when you use this to prove properties of your OCaml code.

A: Yes.

Q: How can I use this to prove properties about recursive functions -- e.g. whether they are tail-recursive?

A: (?)

Q: Do you handle local references?

A: Not at this moment.

Q: You only support part of the Pervasives library. Which parts do you not support?

A: Can't currently pass effectful functions as arguments, so for example List.iter is not supported in the usual use case.

High Performance Client-Side Web Programming with SPOC and Js of ocaml (*), by Mathias Bourgoin and Emmanuel Chailloux (Université Pierre et Marie Curie)

This is the OCaml Users and Developers Workshop 2014 in Gothenburg. Parallel OCaml? Lots of libraries. One is SPOC = Stream Processing OCaml (with OpenCL).

GPGPU programming. Two main frameworks: CUDA and OpenCL. Use different languages to write kernels (Assembly or C/C++ subset) and to manage kernels (more or less any general purpose language can be used here).

SPOC compiles to CUDA or OpenCL. It contains the Sarek DSL and a runtime.

WebSpoc: compile OCaml with SPOC and then to JS with js_of_ocaml.

Demo: Using SPOC from within a browser to do image manipulation.

WebSpoc helps develop complex web apps with intensive computations. Good for GPGPU courses.

Future work: add a JS memory manager dedicated to SPOC vectors; add bindings to WebGL.

Demo online: http://mathiasbourgoin.github.io/SPOC/tutos/OCaml2014.html

SPOC and Sarek are available in OPAM.

Q: Is there potential to run this code on the GPU of a mobile phone?

A: Not yet.

Using Preferences to Tame your Package Manager, Roberto Di Cosmo (presenting, D+I), Pietro Abate (D), Stefano Zacchiroli (D), Fabrice Le Fessant (I), Louis Gesbert (OCamlPro); (D = Université Paris Diderot, I = INRIA)

Ten years of research on package management -- EDOS and MANCOOSI, bridging research communities. Used as foundation of OPAM.

LOTS of package managers out there! Binary, source, language specific, application specific, decentralized, functional approach.

What's inside? Two sides: people maintaining packages (server side) -- maintain a coherent set of packages. Also "client side" -- fetch and authenticate metadata and packages, resolve dependencies, user preferences.

Dependency solving: installability of a single package and co-installability of a set of packages are NP-complete problems.

Application: find uninstallable packages in a repository: just call a SAT solver on each package in the repository! ("dose" library is specialized for this task)
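As a rough illustration of what "installable" means, here is a brute-force sketch. It is not how the dose library works (that uses a SAT solver); it simply tries every subset of a tiny repository, which is exponential and only usable on toy inputs. The `pkg` record, the example repository and all function names are hypothetical.

```ocaml
type pkg = {
  name : string;
  depends : string list list;  (* conjunction of disjunctions *)
  conflicts : string list;
}

(* All subsets of a list (exponentially many). *)
let rec subsets = function
  | [] -> [ [] ]
  | x :: rest ->
    let without = subsets rest in
    List.map (fun s -> x :: s) without @ without

(* A set of packages is healthy when every dependency clause of every member
   is satisfied inside the set and no member conflicts with another member. *)
let healthy (set : pkg list) : bool =
  let installed n = List.exists (fun p -> p.name = n) set in
  List.for_all
    (fun p ->
      List.for_all (fun clause -> List.exists installed clause) p.depends
      && not (List.exists installed p.conflicts))
    set

(* A package is installable if some healthy set contains it. *)
let installable (repo : pkg list) (target : string) : bool =
  List.exists
    (fun set -> List.exists (fun p -> p.name = target) set && healthy set)
    (subsets repo)

let repo = [
  { name = "a"; depends = [ [ "b"; "c" ] ]; conflicts = [] };
  { name = "b"; depends = []; conflicts = [ "a" ] };
  { name = "c"; depends = []; conflicts = [] };
  { name = "d"; depends = [ [ "b" ]; [ "a" ] ]; conflicts = [] };
]

(* "d" needs both "a" and "b", which conflict, so it can never be installed. *)
let broken =
  List.filter (fun p -> not (installable repo p.name)) repo
  |> List.map (fun p -> p.name)
```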

ows.irill.org is the OPAM Weather Service -- shows which packages can be installed together.

Finding a solution is NP-complete but installing and upgrading is more demanding -- how many ways are there to install a package? Exponentially many.

Towards modular package managers. 0. stop coding a petty solver for every new component-based system; 1. use a common upgrade description format; 2. provide means for expressing user choice.

User preferences: built from four ingredients. Package selectors, measurements, maximisation/minimisation, aggregation.

Example for minimisation: -count(removed) -- we want a solution where the number of removed packages is minimised.

Example profiles:

"Paranoid": -count(removed),-count(changed)

"Trendy": -count(removed),-notuptodate(solution),-count(new)

Slightly more exotic:

"Minimal system": -sum(solution,installedsize),-count(solution)

"Noah's ark": +count(solution)

"Fast bootstrap": -sum(solution,compiletime)

Repairing a broken system configuration:

"Fixup simple": -count(changed)

"Fixup trendy": -count(changed),-count(down),-notuptodate(solution)

This is all possible with OPAM 1.2! (Check opam --help, look for --criteria)
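One way to read the profiles above is as a list of measurements that are compared in order of priority. The sketch below assumes that criteria are aggregated lexicographically (earlier criteria win), and models a candidate solution as a hypothetical `summary` record of counts; neither the record nor the function names come from OPAM.

```ocaml
(* Hypothetical summary of a candidate solution. *)
type summary = { removed : int; changed : int; fresh : int; notuptodate : int }

(* A criterion is a measurement to minimise ("-") or maximise ("+"). *)
type criterion = Min of (summary -> int) | Max of (summary -> int)

(* Cost vector: smaller is better in every position. *)
let cost (profile : criterion list) (s : summary) : int list =
  List.map (function Min f -> f s | Max f -> 0 - f s) profile

(* Lexicographic aggregation: structural comparison on equal-length int
   lists compares element by element, so earlier criteria take priority. *)
let best (profile : criterion list) (candidates : summary list) : summary option =
  match candidates with
  | [] -> None
  | c :: rest ->
    Some
      (List.fold_left
         (fun acc s ->
           if compare (cost profile s) (cost profile acc) < 0 then s else acc)
         c rest)

(* "Paranoid": -count(removed),-count(changed) *)
let paranoid = [ Min (fun s -> s.removed); Min (fun s -> s.changed) ]

(* "Trendy": -count(removed),-notuptodate(solution),-count(new) *)
let trendy =
  [ Min (fun s -> s.removed); Min (fun s -> s.notuptodate); Min (fun s -> s.fresh) ]

let upgrade_all = { removed = 0; changed = 5; fresh = 3; notuptodate = 0 }
let touch_little = { removed = 0; changed = 1; fresh = 0; notuptodate = 4 }
```

With these two candidates, the paranoid profile picks `touch_little` and the trendy profile picks `upgrade_all`, even though both remove nothing.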

You can help: try different profiles, test expressivity of the preference language, help debug OPAM.

Conclusion: package managers are complex; a very hard part is dependency solving! Modern package managers must share common components, in particular dependency solvers and the user preference language.

Q: Does this keep track of explicitly installed packages?

A: Yes, OPAM knows which packages were installed directly (the "root set") and which were installed in order to satisfy dependencies.

Q: Why don't other package managers use this technology now?

A: Package managers are core parts of any system so people tend to be very resistant to change.

Simple, efficient, sound-and-complete combinator parsing for all context-free grammars, using an oracle (*), by Tom Ridge (University of Leicester)

The P3 combinator parsing library. Can handle all context-free grammars (CFGs). Scannerless or can use a lexer. Good theoretical basis, but slow.

Example given.

Memoized counting -- you can't do this with any other parser!

Compute actions over all good parse trees; there are exponentially many such parse trees, but this doesn't have to take exponential time!
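The point about exponentially many parse trees can be illustrated without any parsing library. The sketch below is not P3's API; it is a hand-written memoized counter for the hypothetical ambiguous grammar E -> E E | "1", where a string of n ones has Catalan(n-1) parse trees but counting them only needs a table indexed by substring.

```ocaml
(* Count the parse trees of s for the grammar E -> E E | "1".
   count i j is the number of parses of the substring s[i..j). *)
let count_parses (s : string) : int =
  let memo = Hashtbl.create 64 in
  let rec count i j =
    match Hashtbl.find_opt memo (i, j) with
    | Some n -> n
    | None ->
      (* Rule E -> "1" applies to a single character '1'. *)
      let leaf = if j - i = 1 && s.[i] = '1' then 1 else 0 in
      (* Rule E -> E E: try every split point into two non-empty halves. *)
      let split = ref 0 in
      for k = i + 1 to j - 1 do
        split := !split + (count i k * count k j)
      done;
      let n = leaf + !split in
      Hashtbl.add memo (i, j) n;
      n
  in
  count 0 (String.length s)
```

There are only O(n^2) distinct substrings, so the table stays small even though the number of trees grows exponentially with the input length.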

Disambiguation: encode it directly in code using "option".

Supports modular combination, e.g. "helper parsers".

Comparison with Happy: it's faster, in some cases ridiculously so.

Long term aim: general parser, verified correctness and performance, usable in the real world.

Q: What's the use case?

A: It's extremely flexible, but slower than deterministic parsers.

ML Family Workshop

Welcome to the ML Family workshop liveblog with Leo, our guest blogger Gabriel Scherer and myself.

Welcome

Welcome, this year we worked closely with the OCaml workshop to give the two workshops their own focus; this workshop is more theory focused than the OCaml workshop. This workshop doesn't just include features in current SML, but also features that could be included in the future. We welcome all MLs from the ML family. We will be publishing the proceedings and they will be available free online.

Session 1: Module Systems

Chair: Didier Rémy

1ML -- core and modules as one (Or: F-ing first-class modules) (Research presentation)

Andreas Rossberg

Abstract: We propose a redesign of ML in which modules are first-class values. Functions, functors, and even type constructors are one and the same construct. Likewise, no distinction is made between structures, records, or tuples, including tuples over types. Yet, 1ML does not depend on dependent types, and its type structure is expressible in terms of plain System F-omega, in a minor variation of our F-ing modules approach. Moreover, it supports (incomplete) Hindley/Milner-style type inference. Both is enabled by a simple universe distinction into small and large types.

We are rethinking the design of ML. We talk about ML as a language, but it's actually at least 2.5 languages. There is the core language and the module language. There's even a 3rd language of type expressions. These are all syntactically different.

Who likes the type expression syntax in ML ? No-one

Modules in ML are 2nd class. Modules are more verbose but powerful, leaving us with some difficult decisions. There has been work on packing modules as first-class values.

We want a classless society !

I want the expressive power of modules, with style.

I propose 1ML: a first-class module language / unified ML

No more starting with a core language and adding module constructs. Instead we start with a module language and then add some core constructs.

Example of explicitly typed 1ML: similar to ML plus two functor types, one for pure and one for impure. Type declaration syntax is replaced with anonymous modules.

Examples of types as modules: this is common in module ILs, it's not too new.

This extends to type constructors as applicative functors.

The unavoidable map functor example :)

A more interesting example: the textbook example of selecting something at runtime. This could be done in OCaml but it would be much more verbose.
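For comparison, this is roughly what runtime selection looks like with OCaml's packaged first-class modules. It is a sketch of the OCaml side only, not the 1ML code from the talk; the `SET` signature, the two implementations and the size threshold are made up for illustration.

```ocaml
module type SET = sig
  type t
  val empty : t
  val add : int -> t -> t
  val mem : int -> t -> bool
end

(* Simple list-backed implementation, fine for small sets. *)
module List_set : SET = struct
  type t = int list
  let empty = []
  let add x s = if List.mem x s then s else x :: s
  let mem = List.mem
end

(* Balanced-tree implementation from the standard library. *)
module Tree_set : SET = struct
  module S = Set.Make (Int)
  type t = S.t
  let empty = S.empty
  let add = S.add
  let mem = S.mem
end

(* Pick an implementation at runtime by packing modules as values. *)
let choose (expected_size : int) : (module SET) =
  if expected_size > 100 then (module Tree_set : SET)
  else (module List_set : SET)

(* Unpack the chosen module and use it like any other module. *)
let contains_all (expected_size : int) (xs : int list) : bool =
  let module M = (val choose expected_size) in
  let s = List.fold_left (fun acc x -> M.add x acc) M.empty xs in
  List.for_all (fun x -> M.mem x s) xs
```

The explicit `(module ... : SET)` packing and `(val ...)` unpacking are the extra ceremony that a language with truly first-class modules would not need.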

Example of a collection to demonstrate associated types. Examples of how this can again be achieved in OCaml, but it's still more verbose.

Example code using higher-kinded polymorphism, which couldn't be done in OCaml

I am using F-ing modules semantics and collapsing syntax and semantics.

Challenges: decidability for subtyping, phase separation and type inference. The avoidance problem wasn't a problem at all.

Decidability: the ability to have abstract signatures can introduce decidability issues. Matching can lead to an infinite sequence of substitutions.

We disallow substitution on abstract types. This is also in papers from the 80's. We separate types into polytypes and monotypes. Only small types can have type 'type'.

Example of F'ing types definition for large and small types.

Phase separation: Not a problem

Type inference: We will only infer small types, so annotations can be omitted. We can recover ML style polymorphism with implicit functions.

Revisiting the Map example with all the type annotations.

Inference and subtypes: Subtyping relations almost degenerates to type equivalence on small types.

Incompleteness: Subtyping allows width subtyping, Include is a form of record concatenation and value restriction inside functors.

SML can't infer any of this either !

F-ing modules is almost a first-class module language. Truly first-class modules are perfectly doable without regressing the language. Isolate the essence of ML's core/modules.

Future: generalise applicative functors, generalise implicit functors (aka type classes ?), row polymorphism, more sophisticated inference for first-class polymorphism, recursive modules. This + MixML might be like Scala.

Q: Is predicativity something we might regret in the future ?

A: We have a pack-like construct for first-class modules

Q: The last 10 years we have moved towards dependent types, you seem to have moved in the opposite direction ?

A: Interesting question

Type-level module aliases: independent and equal (Research presentation)

Jacques Garrigue (Nagoya University); Leo White (University of Cambridge)

Abstract: We promote the use of type-level module aliases, a trivial extension of the ML module system, which helps avoiding code dependencies, and provides an alternative to strengthening for type equalities.

Type-level module aliases - a simple feature in OCaml 4.02

Writing ```module S = String``` in a signature will alias S to String.
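A small sketch of what this looks like in practice (OCaml 4.02 or later). The signature `SIG`, the module `Impl` and the function `shout` are hypothetical names chosen for the example.

```ocaml
module type SIG = sig
  module S = String          (* type-level alias inside a signature *)
  val shout : S.t -> S.t
end

module Impl : SIG = struct
  module S = String
  let shout s = S.uppercase_ascii s ^ "!"
end

(* Because the signature records that S is String, Impl.S.t and string are
   known to be the same type, without copying String's signature. *)
let greeting : string = Impl.shout "hello"
let length_via_alias : int = Impl.S.length greeting
```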

Introducing the new inferred type-level module aliases and how this extends the subtyping relation.

The concept of type-level module aliases first appeared in Traviata (ICFP '06), where it was used to allow type inference of recursive modules.

Later we discovered that type-level modules aliases were a good match for OCaml-style applicative functors.

Helping applicative functors was probably not enough to justify a new feature. However remember that the original goal was to simplify program analysis.

Modules as name spaces: sharing of types allows grafting a module somewhere else in the hierarchy.

This idea only works in theory: with separate compilation things break down. Treating libraries as a forest of modules risks name conflicts. -pack is monolithic, and using modules as name spaces creates large interfaces (e.g. JS core).

How do other languages handle this issue? SML uses a compilation manager, which uses special files written in a dedicated syntax.

Example of manual packing

Now adding type-level module alias. We do not need to duplicate the module signatures.

Induced dependencies - a new compilation flag -no-alias-deps for 4.02.

3 Steps:

- Create a mapping unit whose role is only to map short names to prefixed names

- open this unit

- create an export unit
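The three steps can be mimicked inside a single file, with nested modules standing in for separately compiled units. This is only a sketch: in a real project each module below would be its own source file compiled with -no-alias-deps, and all the names (`Mylib_list_utils`, `Mylib_map`, `Mylib`, ...) are hypothetical.

```ocaml
(* Stand-ins for source files that were renamed to unique, prefixed names. *)
module Mylib_list_utils = struct
  let sum = List.fold_left ( + ) 0
end

module Mylib_string_utils = struct
  let shout s = String.uppercase_ascii s ^ "!"
end

(* Step 1: a mapping unit whose only role is to map short names to the
   prefixed names. It contains nothing but aliases. *)
module Mylib_map = struct
  module List_utils = Mylib_list_utils
  module String_utils = Mylib_string_utils
end

(* Step 2: code inside the library opens the mapping unit and then uses
   the short names. *)
module Mylib_client = struct
  open Mylib_map
  let demo = String_utils.shout (string_of_int (List_utils.sum [ 1; 2; 3 ]))
end

(* Step 3: an export unit that presents the library to its users. *)
module Mylib = struct
  module List_utils = Mylib_list_utils
  module String_utils = Mylib_string_utils
end
```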

Compared to using -pack, this approach requires renaming source files to unique names and adding an open statement.

In core/async this divides the size of compiled interfaces by 3.

Currently, type-level module aliases can be created only for a limited subset of module paths; this excludes features like functor applications, opaque coercions, functor arguments and recursive modules.

C: This also reduces false dependencies.

Q: What's the meta theory of this ?

A: This wasn't part of this work but we're looking into normalisation in the OCaml compiler

C: We are also using this kind of thing for Backpack in Haskell

Q: Currently we use ocamldep, what will happen to this tool ?

Session 2: Verification

Chair: Anil Madhavapeddy

Well-typed generic smart-fuzzing for APIs (Experience report)

Thomas Braibant (Cryptosense); Jonathan Protzenko; Gabriel Scherer (INRIA)

Abstract: In spite of recent advances in program certification, testing remains a widely-used component of the software development cycle. Various flavors of testing exist: popular ones include unit testing, which consists in manually crafting test cases for specific parts of the code base, as well as quickcheck-style testing, where instances of a type are automatically generated to serve as test inputs.

These classical methods of testing can be thought of as internal testing: the test routines access the internal representation of the data structures to be checked. We propose a new method of external testing where the library only deals with a module interface. The data structures are exported as abstract types; the testing framework behaves like regular client code and combines functions exported by the module to build new elements of the various abstract types. Any counter-examples are then presented to the user. Furthermore, we demonstrate that this methodology applies to the smart-fuzzing of security APIs.

I work for a start-up that works on hardware security modules (HSMs). Some hackers have been able to steal lots of money due to issues with HSMs.

Cryptosense Workflow

Testing -> Learning -> Model-checking -> ...

We will focus on testing: we automatically test APIs using QuickCheck (a combinator library to generate test cases in Haskell). But to generate tests we need to know how to generate our types/data structures.

ArtiCheck - a prototype library to generate test cases for safety properties. Comparison of QuickCheck and ArtiCheck.

Types help us to generate good random values, API's help generate values that have the right internal invariants.

Well-typed does not mean well-formed so we still need fuzzing.

Describing & testing APIs: we are only considering first-order functions.

Example of how we would test an example of API for red black trees.

Introducing a toy DSL for describing API's: describing types, functions and signatures.

There are many ways to combine these functions, so we introduce a richer DSL for types.
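The external-testing idea can be sketched without any DSL at all. The code below is not ArtiCheck's API; it is a minimal stand-in in which the tester sees only a hypothetical `SORTED` signature, builds values of the abstract type purely by calling the exported functions, and checks an invariant on every value it manages to build.

```ocaml
(* The library under test: only this signature is visible to the tester. *)
module type SORTED = sig
  type t
  val empty : t
  val insert : int -> t -> t
  val to_list : t -> int list
end

module Good : SORTED = struct
  type t = int list
  let empty = []
  let rec insert x = function
    | [] -> [ x ]
    | y :: ys as l -> if x <= y then x :: l else y :: insert x ys
  let to_list l = l
end

(* A deliberately buggy implementation: insert ignores the ordering. *)
module Bad : SORTED = struct
  type t = int list
  let empty = []
  let insert x l = x :: l
  let to_list l = l
end

let rec is_sorted = function
  | x :: (y :: _ as rest) -> x <= y && is_sorted rest
  | _ -> true

(* Enumerate instances by applying insert to every known instance and every
   constant, up to a given depth. Returns the first counter-example found. *)
let check (module M : SORTED) ~(depth : int) : int list option =
  let inputs = [ 0; 1; 2 ] in
  let step pool =
    List.concat_map (fun v -> List.map (fun x -> M.insert x v) inputs) pool
  in
  let rec go pool d =
    match List.find_opt (fun v -> not (is_sorted (M.to_list v))) pool with
    | Some v -> Some (M.to_list v)
    | None -> if d = 0 then None else go (step pool) (d - 1)
  in
  go [ M.empty ] depth
```

Exhaustive enumeration is used here instead of random generation so that the result is deterministic; a real tool would need something smarter to cope with the size of the space.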

Field Report: Cryptosense

We need to enumerate a big combinatorial space made of constants and variables. We want good coverage and to generate templates in a principled manner.

We have a library of enums with a low memory footprint with efficient access.

We have another DSL for describing combinatorial enumeration.

Example test results from an HSM. We have 10^5 tests in 540 secs.

We have a principled way to test persistent APIs.

Writing a generator for testing BDD would be very tough without solutions like this.

Come work for Cryptosense :)

Q: How long does it take to run the data on a real HSM ?

A: The numbers shown already include that; we dynamically explore the state space.

Q: How do you trade off coverage of the API against coverage of the state space ?

A: You use a systematic approach, e.g. constantly generate trees which are bigger and bigger, so you don't just regenerate empty trees.

Q: Can you find a simple counterexample like QuickCheck ?

A: It's not currently a function, but maybe we can add a function to find a smaller example that exhibits the same behaviour

Q: Is this open source ?

A: Yes, the prototype is on GitHub

Improving the CakeML Verified ML Compiler (Research presentation)

Ramana Kumar; Magnus O. Myreen (University of Cambridge); Michael Norrish (NICTA); Scott Owens (University of Kent)

Abstract: The CakeML project comprises a mechanised semantics for a subset of Standard ML and a formally verified compiler. We will discuss our plans for improving and applying CakeML in four directions: optimisations, new primitives, a library, and verified applications.

Introducing the CakeML team, we are verification people.

Why ML for verification: it's clean and high level, and it's easy to reason about, so we can then reason about it formally.

CakeML: a subset of ML which is easy to reason about, with a verified implementation; we demonstrate how easy it is to generate verified CakeML.

How can we make proof assistants into trustworthy and practical program development platforms?

functions in HOL -> CakeML -> machine code

2 parts to this talk:

- verified compiler

- making the compiler better

Highlights of CakeML compiler:

Most verified compilers go from source code to AST to IR to bytecode to machine code. We take a different approach and can do both dimensions in full.

The CakeML language: it's SML without IO and functors. Since POPL '14 we've added arrays, vectors, strings etc.

Contributions of the POPL '14 paper: specification, verified algorithms, divergence preservation and bootstrapping. A proof development where everything fits together.

Numbers: it's slower; our aim is to be faster than interpreted OCaml.

The compiler phases are simple; we use only a few hops from IR to x86. Bytecode simplified the proofs of the read-eval-print loop but made optimisation impossible.

We are now adding many more IRs to enable optimisation, like common compilers. Currently we only have an x86 backend but we are adding ARM etc.

C: Inlining and specialisation of recursive functions are the key optimisations that you should focus on

C: You could use your compiler (written in CakeML) to evaluate the value of various optimisations

Q: Why don't you support functors ?

A: It's not a technical issue; it's just a lot of work, and it's at the top of the priorities stack.

C: Your runtime value representation could cause big performance issues

C: Can you eliminate allocation in large blocks

Session 3: Beyond Hindley-Milner

Chair: Jacques Garrigue

The Rust Language and Type System (Demo)

Felix Klock; Nicholas Matsakis (Mozilla Research)

Abstract: Rust is a new programming language for developing reliable and efficient systems. It is designed to support concurrency and parallelism in building applications and libraries that take full advantage of modern hardware. Rust's static type system is safe and expressive and provides strong guarantees about isolation, concurrency, and memory safety.

Rust also offers a clear performance model, making it easier to predict and reason about program efficiency. One important way it accomplishes this is by allowing fine-grained control over memory representations, with direct support for stack allocation and contiguous record storage. The language balances such controls with the absolute requirement for safety: Rust's type system and runtime guarantee the absence of data races, buffer overflows, stack overflows, and accesses to uninitialized or deallocated memory. In this demonstration, we will briefly review the language features that Rust leverages to accomplish the above goals, focusing in particular on Rust's advanced type system, and then show a collection of concrete examples of program subroutines that are efficient, easy for programmers to reason about, and maintain the above safety property.

If time permits, we will also show the current state of Servo, Mozilla's research web browser that is implemented in Rust.

Motivation: we want to implement a next-gen web browser, Servo

See the rusty playpen online http://www.rust-lang.org/

We want a language for systems programming; C/C++ dominate this space. We want sound type checking. We identify classic errors and use typing to fix them.

Well-typed programs help to assign blame.

Systems programmers want to be able to predict performance.

Rust syntax is very similar to OCaml. Types have to be explicit on top-level functions. Rust has bounded polymorphism; we don't have functors, this is more similar to typeclasses.

The value models of OCaml and Rust are very different. Rust in-lines storage but must pick the size of the largest variant.

To move or copy ? Move semantics

The Copy bound expresses that a type is freely copyable, and it's checked by the compiler.

Many built-in types implement Copy

Why all the fuss about move semantics ?

Rust has 3 core types: non-reference, shared reference, mutable unaliased reference. (plus unsafe pointers etc..)

Rust pattern matching moves stack slots. Rust introduces ref patterns, which take references into the interior of the matched value.

This all serves type soundness

Q: Is borrowing first order ? Can I give to someone else ?

A: Yes, I'll explain more later

Lifetimes: similar to the regions used by Tofte/Talpin

Example of sugared and desugared forms of functions: how we can explicitly constrain lifetimes

Is every kind of mutability forced into a linearly passed type ?

Not really, there's inherited and interior mutability.

Rust has closures

Q: Does Rust repeat earlier work on Cyclone ?

A: Cyclone tried to stay very close to C, but we are more than happy to diverge from C/C++

Q: Large-scale systems code becomes tainted with linearity, how do you deal with that ?

A: You can break out of it; you only very occasionally need to break into unsafe code

Q: How do you know that your type system is safe ?

A: There's ongoing work

Doing web-based data analytics with F# (Informed Position)

Tomas Petricek (University of Cambridge); Don Syme (Microsoft Research Cambridge)

Abstract: With type providers that integrate external data directly into the static type system, F# has become a fantastic language for doing data analysis. Rather than looking at F# features in isolation, this paper takes a holistic view and presents the F# approach through a case study of a simple web-based data analytics platform.

Experiments can have a life of their own, independent of a large-scale theory. This is a relevant case study, theory and language independent, and demonstrates a nice combination of language features.

Demo: demo using type providers and translation from F# to JS.

Features used: type providers, meta-programming, ML type inference and async workflows.

JS integration: questions on whether to use JS or F# semantics.

Asynchronous workflows - single thread semantics

Not your grandma's type safety.

Q: These language features are good for specific applications, do they make the language worse for other uses ?

A: No, the extensions use minimal syntax

Q: Are there version constraints on type providers for data sources ?

A: No

The rest of the posts will follow soon

Session 4: Implicits

Chair: Andreas Rossberg

Implicits in Practice (Demo)

Nada Amin (EPFL); Tiark Rompf (EPFL & Oracle Labs)

Abstract: Popularized by Scala, implicits are a versatile language feature that are receiving attention from the wider PL community. This demo will present common use cases and programming patterns with implicits in Scala.

Modular implicits (Research presentation)

Leo White; Frédéric Bour (University of Cambridge)

Abstract: We propose a system for ad-hoc polymorphism in OCaml based on using modules as type-directed implicit parameters.

Session 5: To the bare metal

Chair: Martin Elsman

Metaprogramming with ML modules in the MirageOS (Experience report)

Anil Madhavapeddy; Thomas Gazagnaire (University of Cambridge); David Scott (Citrix Systems R&D); Richard Mortier (University of Nottingham)

Abstract: In this talk, we will go through how MirageOS lets the programmer build modular operating system components using a combination of functors and metaprogramming to ensure portability across both Unix and Xen, while preserving a usable developer workflow.

Compiling SML# with LLVM: a Challenge of Implementing ML on a Common Compiler Infrastructure (Research presentation)

Katsuhiro Ueno; Atsushi Ohori (Tohoku University)

Abstract: We report on an LLVM backend of SML#. This development provides detailed accounts of implementing functional language functionalities in a common compiler infrastructure developed mainly for imperative languages. We also describe techniques to compile SML#'s elaborated features including separate compilation with polymorphism, and SML#'s unboxed data representation.

Session 6: No longer foreign

Chair: Oleg Kiselyov

A Simple and Practical Linear Algebra Library Interface with Static Size Checking (Experience report)

Akinori Abe; Eijiro Sumii (Tohoku University)

Abstract: While advanced type systems--specifically, dependent types on natural numbers--can statically ensure consistency among the sizes of collections such as lists and arrays, such type systems generally require non-trivial changes to existing languages and application programs, or tricky type-level programming. We have developed a linear algebra library interface that guarantees consistency (with respect to dimensions) of matrix (and vector) operations by using generative phantom types as fresh identifiers for statically checking the equality of sizes (i.e., dimensions). This interface has three attractive features in particular.

(i) It can be implemented only using fairly standard ML types and its module system. Indeed, we implemented the interface in OCaml (without significant extensions like GADTs) as a wrapper for an existing library.

(ii) For most high-level operations on matrices (e.g., addition and multiplication), the consistency of sizes is verified statically. (Certain low-level operations, like accesses to elements by indices, need dynamic checks.)

(iii) Application programs in a traditional linear algebra library can be easily migrated to our interface. Most of the required changes can be made mechanically.

To evaluate the usability of our interface, we ported to it a practical machine learning library (OCaml-GPR) from an existing linear algebra library (Lacaml), thereby ensuring the consistency of sizes.

SML3d: 3D Graphics for Standard ML (Demo)

John Reppy (University of Chicago)

Abstract: The SML3d system is a collection of libraries designed to support real-time 3D graphics programming in Standard ML (SML). This paper gives an overview of the system and briefly highlights some of the more interesting aspects of its design and implementation.

Closure

and we headed off to the ICFP Industrial Reception ...

ICFP 2014: Day 3

Good Morning from the 3rd and final day of the 19th International Conference on Functional Programming, as ever Leo and I (Heidi) are here to bring you the highlights.

Keynote (Chair: Edwin Brady)

Depending on Types

Stephanie Weirich (University of Pennsylvania)

Is GHC a dependently typed PL ? Yes*

The story of dependently typed Haskell ! We are not claiming that every Agda program could be ported to Haskell

Example: Red-black trees (RBT) from Agda to Haskell

All the code for today's talk is on github: https://github.com/sweirich/dth

Example of insertion into RBT from Okasaki, 1993.

How do we know that insert preserves RBT invariants ?
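For readers who have not seen it, here is the classic Okasaki-style insertion, transcribed into OCaml rather than the Haskell used in the talk, together with a runtime checker for the two invariants. The checker (`black_height`, `valid`) is a hypothetical helper added for this sketch; the talk's point is to have the type checker enforce the same invariants statically.

```ocaml
type color = R | B
type tree = E | T of color * tree * int * tree

(* Rebalance the four red-red violations that insertion can create. *)
let balance = function
  | B, T (R, T (R, a, x, b), y, c), z, d
  | B, T (R, a, x, T (R, b, y, c)), z, d
  | B, a, x, T (R, T (R, b, y, c), z, d)
  | B, a, x, T (R, b, y, T (R, c, z, d)) ->
    T (R, T (B, a, x, b), y, T (B, c, z, d))
  | c, l, v, r -> T (c, l, v, r)

let insert x t =
  let rec ins = function
    | E -> T (R, E, x, E)
    | T (c, l, v, r) as node ->
      if x < v then balance (c, ins l, v, r)
      else if x > v then balance (c, l, v, ins r)
      else node
  in
  (* Blacken the root; ins never returns E. *)
  match ins t with
  | T (_, l, v, r) -> T (B, l, v, r)
  | E -> E

(* Returns the black height if no red node has a red child and all paths
   contain the same number of black nodes, and None otherwise. *)
let rec black_height = function
  | E -> Some 1
  | T (c, l, _, r) ->
    let is_red = function T (R, _, _, _) -> true | _ -> false in
    if c = R && (is_red l || is_red r) then None
    else
      match black_height l, black_height r with
      | Some hl, Some hr when hl = hr -> Some (if c = B then hl + 1 else hl)
      | _ -> None

(* A valid tree also has a black root. *)
let valid t =
  (match t with T (R, _, _, _) -> false | _ -> true)
  && black_height t <> None

let of_list xs = List.fold_left (fun t x -> insert x t) E xs
```

Testing `valid` on trees built by `insert` gives some confidence, but only for the inputs tried; encoding the invariants in the types rules out violations for all inputs.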

We are going to use types

Example RBT in Agda from Licata. We can now turn this into GHC using GADTs and datatype promotion.

Haskell distinguishes types from terms, Agda does not.

In Haskell types are erased at runtime, unlike Agda.

Datatype promotion: a recent extension to GHC; we can't promote things like GADTs though.

GADTs: introduced to GHC 10 years ago; the real challenge was integration with Hindley-Milner type inference

Silly Type Families - GADT's from 20 years ago

Our current insert into the RBT needs to temporarily suspend the RBT invariants, but how can we express this in the Haskell type system ?

Singleton Types: Standard trick for languages with type-term distinctions.

The difference between Agda and Haskell: Totality

What's next for GHC ?

Datatype promotion only works once

Wishlist

- TDD: Type-driven development (as described by Norman yesterday)

- Totality checking in GHC

- Extended type inference

- Programmable error messages

- IDE support - automatic case splitting, code completion and synthesis

GHC programmers can use dependent types*

Q: Why not use incr in the definition to unify the two constructors ?

A: You can, I was just simplifying for explanation

Q: It seems like this increases cost, is this the case ?

A: Yes, but not too much

Q: Are dependent types infectious ?

A: Good question. No, we can add a SET interface to this RBT to abstract over it

Q: To what extent is Haskell the right place for these new features ?

A: It's a great testbed at industrial scale and we can take lessons back into dependently typed languages

Q: Can you do this without type families ?

A: Yes, I wanted to show type families

Q: Why not just use Agda ?

A: Haskell has a large user base, a significant ecosystem, 2 decades of compiler dev

Q: What's the story for keeping our type inference predictable ?

Q: What level of students could use these ideas ?

A: I've used them in an advanced programming course for seniors

Session 10: Homotopy Type Theory (Chair: Derek Dreyer)

Homotopical Patch Theory

Carlo Angiuli (Carnegie Mellon University); Ed Morehouse (Carnegie Mellon University); Daniel Licata (Wesleyan University); Robert Harper (Carnegie Mellon University)

Idea: define a patch theory within Homotopy Type Theory (HoTT)

Functorial semantics: define equational theories as functors out of some category. A group is a product-preserving functor G -> Set

Homotopy Type Theory: constructive, proof-relevant theory of equality inside dependent type theory. Equality proofs a = b are identifications of a with b

Can have identifications of identifications.

Higher Inductive Types introduce non-sets: arbitrary spaces.

Patch Theory defines repositories and changes in the abstract -- model repositories, changes, patch laws governing them.

Key idea: think of patches as identifications!

Bijections between sets X and Y yield identifications X = Y.

Example: repository is an integer; allow adding one. Everything works out nicely.

Next example: repository is a natural number; allow adding one. Problem: can't always subtract one (which would be the inverse of adding one)! -- solved using singleton types.
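The contrast between the two examples can be seen in a plain functional model, with no HoTT involved. In the sketch below the `patch` type and all function names are hypothetical: on an integer repository every patch has an inverse that undoes it, while on a natural-number repository the inverse of "add one" is only partial.

```ocaml
(* Patches on a repository that holds a single number. *)
type patch = Id | Add of int | Seq of patch * patch

(* Integer repository: applying a patch always succeeds. *)
let rec apply p n =
  match p with
  | Id -> n
  | Add k -> n + k
  | Seq (p, q) -> apply q (apply p n)

(* Every patch has an inverse; sequences are undone in reverse order. *)
let rec invert = function
  | Id -> Id
  | Add k -> Add (-k)
  | Seq (p, q) -> Seq (invert q, invert p)

(* Natural-number repository: a patch fails if it would go below zero,
   so the inverse of Add 1 is not defined on every repository state. *)
let rec apply_nat p n =
  match p with
  | Id -> Some n
  | Add k -> if n + k >= 0 then Some (n + k) else None
  | Seq (p, q) -> Option.bind (apply_nat p n) (apply_nat q)
```

In the integer case `apply (Seq (p, invert p))` behaves as the identity, which is the group-like behaviour that identifications give for free; the natural-number case breaks this symmetry.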

Closing thoughts: computation vs. homotopy. There is a tension between equating terms by identifications and distinguishing them by computations. Analogy: function extensionality already equates bubble sort and quicksort -- they are the same function but different programs. Computation is finer-grained than equality.

C: Very understandable talk! But I still need to see a talk / paper where Homotopy Type Theory guides me to something new.

A: The functorial part comes for free within HoTT. Also we're forced to do everything functorially.

Q: Patch computation -- does your theory say anything about that?

A: Didn't work out nicely.

Q: Can you give me some intuition about the singleton type used in the naturals example?

A: This is a hack. We want a directed type that does not have symmetry.

Q: What about higher layers?

A: Don't need to go further than the second layer when dealing with Sets.

Pattern Matching without K

Jesper Cockx (KU Leuven); Dominique Devriese (KU Leuven); Frank Piessens (KU Leuven)

Pattern matching without K

Dependent pattern matching is very powerful, but HoTT is incompatible with dependent pattern matching. The reason: it depends on the K axiom (uniqueness of identity proofs), which HoTT does not allow. But not all forms of dependent pattern matching depend on the K axiom!

Dependent pattern matching. Have a dependent family of types -- example of pattern matching given with dependent pattern matching and without.

K is incompatible with univalence.

How to avoid K: don't allow deleting reflexive equations, and when applying injectivity on an equation c s = c t of type D u, the indices u should be self-unifiable.

Proof-relevant unification: eliminating dependent pattern matching. 1. basic case analysis -- translate each case split to an eliminator; 2. specialization by unification -- solve the equations on the indices; 3. structural recursion -- fill in the recursive calls.

Instead of McBride's heterogeneous equality, use homogeneous telescopic equality.

Possible extensions: detecting types that satisfy K (i.e. sets -- allow deletion of "obvious" equations like 2 = 2); implementing the translation to eliminators (this is being worked on or is done but not yet released); extending pattern matching to higher inductive types.

Conclusion: by restricting the unification algorithm, we can make sure that K is never used -- no longer have to worry when using pattern matching for HoTT!

Q: What was your experience contributing to Agda?

A: Not a huge change -- not too hard.

Q: Is there a different way of fixing the "true = false" proof?

A: K infects the entire theory.

Q: You said the criterion is conservative. Have you tried recompiling Agda libraries? What did you observe?

A: 36 errors in the standard library, 16 of which are easily fixable, 20 are unfixable or it is unknown how to fix them.

Q: Is K infectious?

A: Yes -- when activating the "without K" option, you cannot use a library that defines something equivalent to K.

Session 11: Abstract Interpretation (Chair: Patricia Johann)

Refinement Types For Haskell

Niki Vazou (UC San Diego); Eric L. Seidel (UC San Diego); Ranjit Jhala (UC San Diego); Dimitrios Vytiniotis (Microsoft Research); Simon Peyton Jones (Microsoft Research)

Refinement Types: types with a predicate from a logical sub-language

can be used to give functions preconditions

Example: division by zero.
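
A rough runtime analogue of the division example, in Python. This is only a sketch: a refinement type checker discharges the precondition statically, whereas the code below checks it when the function is called. The names `refine`, `NonZero`, `safe_div` and `RefinementError` are made up for illustration.

```python
class RefinementError(Exception):
    """Raised when a value does not satisfy its refinement predicate."""


def refine(predicate, description):
    """Build a checker for the refinement {v | predicate(v)} (hypothetical helper)."""
    def check(value):
        if not predicate(value):
            raise RefinementError(f"{value!r} does not satisfy {description}")
        return value
    return check


# Dynamic stand-in for the refinement type {v:Int | v != 0}.
NonZero = refine(lambda v: v != 0, "{v:Int | v != 0}")


def safe_div(numerator, denominator):
    # The precondition lives on the argument, mirroring a refined parameter type.
    return numerator // NonZero(denominator)


print(safe_div(10, 2))
```

Calling `safe_div(1, 0)` raises `RefinementError` before any division happens; with a static checker the call would be rejected at compile time instead.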

ML, F#, F* have had refinement type implementations but they're CBV! How can this be translated to Haskell?

CBV-style typing is unsound under call-by-name evaluation.

Encode subtyping as logical verification condition (VC)

Under call-by-name, binders may not be values! How to encode "x has a value" in CBN? Introduce labels to types (giving stratified types): label values that provably reduce to a value.

How to enforce stratification? -- Termination analysis! Can use refinement types for this.

Can also check non-terminating code (Collatz example).

How well does it work in practice? LiquidHaskell is an implementation of a refinement type checker for Haskell.

Evaluation: most Haskell functions can be proved to be terminating automatically.

Q: What's a stratified algebraic data type?

A: It's an ADT with a label.

Q: How do you prove termination of structurally recursive functions?

A: Map to numbers (e.g. list to length)
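
The "map to numbers" idea can be sketched dynamically in Python. This is an assumption-laden illustration, not how LiquidHaskell works (which proves the decrease statically): the hypothetical `decreasing` decorator checks at run time that a user-supplied natural-number metric strictly shrinks on every nested recursive call.

```python
import functools


def decreasing(metric):
    """Check that metric(args) is a natural number that strictly decreases
    on each nested recursive call (hypothetical runtime check)."""
    def wrap(fn):
        active = []  # metrics of the calls currently on the call stack

        @functools.wraps(fn)
        def checked(*args):
            m = metric(*args)
            if m < 0:
                raise ValueError("metric must be a natural number")
            if active and m >= active[-1]:
                raise ValueError("metric did not decrease")
            active.append(m)
            try:
                return fn(*args)
            finally:
                active.pop()
        return checked
    return wrap


# Structural recursion on a list: the metric is the list's length.
@decreasing(lambda xs: len(xs))
def total(xs):
    return 0 if not xs else xs[0] + total(xs[1:])


# The first argument grows, so a custom metric is needed: the remaining distance.
@decreasing(lambda lo, hi: max(hi - lo, 0))
def count_up(lo, hi):
    return [] if lo >= hi else [lo] + count_up(lo + 1, hi)


print(total([1, 2, 3]), count_up(0, 3))
```

A function whose argument never shrinks, such as `bad(n) = bad(n)`, trips the check on its first recursive call.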

Q: Refinement types for call-by-push-value?

A: There is some work on refinement types which does not depend on the evaluation strategy.

Q: In the vector algorithms library, every function had to be annotated.

A: Here the first argument usually increases rather than decreases, so we had to write a custom metric, but this was simple.

Q: How does this interact with other features of Haskell like GADTs?

A: LiquidHaskell works on Core so there should be no problem.

Q: Did you find any bugs in your termination analysis of different libraries?

A: Yes, a tiny bug in Text, which has now been fixed.

A Theory of Gradual Effect Systems

Felipe Bañados Schwerter (University of Chile); Ronald Garcia (University of British Columbia); Éric Tanter (University of Chile)

Programs produce results and side-effects. Can use type and effect systems to control this. Here, functions have an effect annotation -- a set of effects that the function may cause. Expressions are also annotated with their effects.

Get into trouble when passing an effectful function to another function: as a safe approximation, the higher-order function must always assume that the function passed in may have any possible effect.

Introduce "unknown" effect (upside-down question mark). Combine this with a generic type-and-effect system which comes with "adjust" and "check" functions.

Use abstract interpretation to lift adjust and check from static to gradual typing. Idea: extend the domains of sets to include "unknown" side-effects.

Define concretization function from consistent privileges to the feasible set of effect sets.
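
A minimal Python sketch of the lifting idea, under several assumptions: the effect universe is a small finite set invented for the example, the static `check` is taken to be plain set inclusion (one possible instance of the generic check), and the lifted check succeeds when at least one concrete privilege set in the concretization passes the static check. None of these names come from the paper.

```python
from itertools import combinations

UNKNOWN = "¿"  # the "unknown" effect
UNIVERSE = frozenset({"read", "write", "alloc"})  # hypothetical finite set of effects


def concretize(privileges):
    """Map a consistent privilege set to the effect sets it may stand for."""
    known = frozenset(privileges) - {UNKNOWN}
    if UNKNOWN not in privileges:
        return {known}  # fully static: exactly one meaning
    extra = sorted(UNIVERSE - known)
    # Unknown may hide any further effects, so take every superset of known.
    return {
        known | frozenset(chosen)
        for size in range(len(extra) + 1)
        for chosen in combinations(extra, size)
    }


def check(required, privileges):
    """Assumed static check: every required effect is granted."""
    return frozenset(required) <= frozenset(privileges)


def consistent_check(required, privileges):
    """Lifted check: some feasible concrete privilege set passes the static check."""
    return any(check(required, concrete) for concrete in concretize(privileges))


print(consistent_check({"write"}, {"read"}))           # statically rejected
print(consistent_check({"write"}, {"read", UNKNOWN}))  # optimistically accepted
```

In the second call the static answer is deferred: a runtime check would still have to confirm that "write" is actually permitted.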

Introduce internal language: with "has" and "restrict" constructs.

Future work: implementation (currently targeting Scala); Blame

Q: Can you always derive the concretization function?

A: It is provided by us.

Q: If you're doing dynamic checking and dealing with arrays, but you can't raise errors -- what are you supposed to do?

A: You don't want to produce a side effect when you're not allowed to do so.

Q: Does your system allow parameterization on effect sets?

A: No, not in this paper. The implementation requires it though so this is future work.

Session 12: Dependent Types (Chair: Ulf Norell)

How to Keep Your Neighbours in Order

Conor McBride (University of Strathclyde)

Conclusion first: Push types in to type less code!

A very engaging and amusing talk. Conor demonstrates (with lots of Agda code!) how to use types to build correct binary heaps and 2-3 trees.

Take-aways: Write types which push requirements in. Write programs which generate evidence.

Q: What does "push requirements in" mean?

A: Start from the requirements. The information that's driving the maintenance of the requirements is passed in. (?)

A Relational Framework for Higher-Order Shape Analysis

Gowtham Kaki (Purdue); Suresh Jagannathan (Purdue)

Shape analysis in functional languages: ADTs track shapes of data. Types can document shape invariants of functions -- but we want to be able to refine these constraints!

Can we refine ML types to express and automatically verify rich relationships? Need a common language to express fine-grained shapes. Build up from relations and relational algebra. Example: in-order and forward-order.

Relational operators are union and cross

Predicates are equality and inclusion
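
To make the relational vocabulary concrete, here is a Python sketch of shape relations on lists built only from union and cross product, with specifications stated as equality or inclusion between relations. The relation names (`r_mem`, `r_ob`, `r_oa`) and the `rev` specification are illustrative assumptions in the spirit of the order relations mentioned above, and the check is done by evaluation rather than by a type checker.

```python
def cross(a, b):
    """Cross product of two sets, as a set of pairs."""
    return {(x, y) for x in a for y in b}


def r_mem(xs):
    """Membership relation: the set of elements of the list."""
    if not xs:
        return set()
    return {xs[0]} | r_mem(xs[1:])


def r_ob(xs):
    """Occurs-before: pairs (x, y) where x appears before y."""
    if not xs:
        return set()
    return cross({xs[0]}, r_mem(xs[1:])) | r_ob(xs[1:])


def r_oa(xs):
    """Occurs-after: pairs (x, y) where x appears after y."""
    if not xs:
        return set()
    return cross(r_mem(xs[1:]), {xs[0]}) | r_oa(xs[1:])


def rev(xs):
    return xs[::-1]


sample = [1, 2, 3]
# Equality predicate: the forward order of rev(l) is the backward order of l.
print(r_ob(rev(sample)) == r_oa(sample))
# Inclusion predicate: filtering can only shrink the membership relation.
print(r_mem([x for x in sample if x > 1]) <= r_mem(sample))
```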

But: relational types for polymorphic and higher-order functions must be general enough to relate different shapes at different call sites. Examples: id, treefoldl : a tree -> b -> (b -> a -> b) -> b

Consider id : a -> a -- the shape of its argument is the shape of its result.

Functions can be parameterized over relations. Also get parametric relations: relations can be parameterized over relations.

We consider the "effectively propositional fragment" of many-sorted first-order logic. Key observation for translation: a fully instantiated parametric relation can be defined in terms of its component non-parametric relations.

Implementation: CATALYST, tycon.github.io/catalyst/

Q: Does your type system allow the user to introduce new relations?

A: Yes.

Q: Can you compile any kind of parametric relation or are there restrictions?

A: You can also write relations on infinite data structures.

Session 13: Domain Specific Languages II (Chair: Yaron Minsky)

There is no Fork: an Abstraction for Efficient, Concurrent, and Concise Data Access

Simon Marlow (Facebook); Louis Brandy (Facebook); Jonathan Coens (Facebook); Jon Purdy (Facebook)

Imagine a web program: the server-side code for generating a webpage, which draws on many data sources. For efficiency, we need concurrency, batching and caching. We could do all of this by brute force, but that destroys modularity and it's messy.

What about using explicit concurrency? It relies on the programmer too much, e.g. they forget to fork or add false dependencies.

A rule engine: input, evaluate rules and return.

Consider coding a blog: example code using the Fetch monad. The concurrency is implicit in the code; just use the monad and applicative operations.

No manual batching, again implicit in code.

Demonstrating code for map and applicative. The monadic bind gives a tree of dependencies for IO.
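
The difference between applicative and monadic composition can be sketched in Python. This is not the Haxl API: `Done`, `Blocked`, `fetch`, `bind`, `both` and `run` are hypothetical names, and the data source is a plain dictionary. A computation is either finished or blocked on a set of requests; pairing two computations merges their requests into one batch, while bind has to wait for the first result.

```python
from dataclasses import dataclass
from typing import Any, Callable, List


@dataclass
class Done:
    value: Any


@dataclass
class Blocked:
    requests: List[str]             # keys needed before we can continue
    resume: Callable[[dict], Any]   # given the cache, yields Done or Blocked


def fetch(key):
    return Blocked([key], lambda cache: Done(cache[key]))


def bind(m, f):
    """Monadic sequencing: f cannot start until m has produced a value."""
    if isinstance(m, Done):
        return f(m.value)
    return Blocked(m.requests, lambda cache: bind(m.resume(cache), f))


def both(a, b):
    """Applicative pairing: requests of both sides go into the same round."""
    if isinstance(a, Done) and isinstance(b, Done):
        return Done((a.value, b.value))
    requests = (a.requests if isinstance(a, Blocked) else []) + \
               (b.requests if isinstance(b, Blocked) else [])

    def resume(cache):
        next_a = a.resume(cache) if isinstance(a, Blocked) else a
        next_b = b.resume(cache) if isinstance(b, Blocked) else b
        return both(next_a, next_b)
    return Blocked(requests, resume)


def run(m, source):
    """Execute m, fetching each round's requests as one batch; count the rounds."""
    cache, rounds = {}, 0
    while isinstance(m, Blocked):
        batch = [k for k in dict.fromkeys(m.requests) if k not in cache]
        if batch:
            rounds += 1
            for key in batch:
                cache[key] = source[key]
        m = m.resume(cache)
    return m.value, rounds


source = {"post:1": "Hello", "post:2": "World"}
print(run(both(fetch("post:1"), fetch("post:2")), source))
print(run(bind(fetch("post:1"), lambda _: fetch("post:2")), source))
```

The paired version needs a single round of fetching, the bound version needs two, and neither mentions batching or forking explicitly.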

This was developed for the Haxl project at Facebook, migrating from an in-house DSL called FXL to Haskell. The framework is open source: github.com/facebook/haxl, or cabal install haxl.

Implicit parallelism is hard but data fetching doesn't suffer from this problem.

Q: Is bind linear in the depth of blocked requests?

A: Take it offline

Q: Is there accidentally sequential code?

A: We haven't rolled it out yet

Folding Domain-Specific Languages: Deep and Shallow Embeddings (Functional Pearl)

Jeremy Gibbons (University of Oxford); Nicolas Wu (University of Oxford)

Introduces a trivial toy language for the examples and defines a simple deep and a simple shallow embedding of it. A shallow embedding must be compositional. The toy language has eval and print; print is messy to add to the shallow embedding.

Dependent interpretations are introduced for the deep embedding; they can also be expressed in the shallow embedding, but not elegantly. Context-sensitive interpretations can again be expressed as a fold.

Deep embeddings are ADTs; shallow embeddings are folds over that abstract syntax.
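
A Python sketch of the slogan, using a made-up arithmetic language (literals and addition) rather than the language from the talk. The deep embedding is a data type with a generic fold; each interpretation is a choice of fold arguments. The shallow embedding applies those same arguments directly, and supporting both eval and print at once means pairing the two results.

```python
from dataclasses import dataclass


# Deep embedding: programs are data.
@dataclass
class Lit:
    n: int


@dataclass
class Add:
    left: object
    right: object


def fold(lit, add, expr):
    """Generic fold over the abstract syntax."""
    if isinstance(expr, Lit):
        return lit(expr.n)
    return add(fold(lit, add, expr.left), fold(lit, add, expr.right))


def eval_deep(expr):
    return fold(lambda n: n, lambda a, b: a + b, expr)


def print_deep(expr):
    return fold(str, lambda a, b: f"({a} + {b})", expr)


# Shallow embedding: constructors compute the semantics directly.
# To get both interpretations, each term is the pair (value, text).
def lit(n):
    return (n, str(n))


def add(a, b):
    return (a[0] + b[0], f"({a[1]} + {b[1]})")


deep = Add(Lit(1), Add(Lit(2), Lit(3)))
shallow = add(lit(1), add(lit(2), lit(3)))
print(eval_deep(deep), print_deep(deep), shallow)
```

The shallow constructors are exactly the functions one would pass to `fold`, which is the sense in which the shallow embedding is a fold over the deep one.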

Q: Has your method for shallow embedding sacrificed extensibility?

A: Yes but we can work around it

Q: What about binders, what about recursion? Your example is too simple.

A: Not sure

Session 14: Abstract Machines (Chair: David Van Horn)

Krivine Nets

Olle Fredriksson (University of Birmingham); Dan Ghica (University of Birmingham)

We're good at writing machine-independent code. But how about architecture independence? (CPU, FPGA, distributed system).

Work: underlying execution mechanism for supporting node annotations t @ A.

Approach: put your conventional abstract machines in the cloud.

Krivine net = instantiation of a network model with Distributed Krivine machine. Network model: synchronous message passing and asynchronous message passing.

Basic idea of execution: remote stack extension.
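
For orientation, a Python sketch of the conventional, single-node Krivine machine (call-by-name, de Bruijn indices). This is the textbook machine, not the distributed variant from the talk, and the term encoding and the step budget are choices made for the example. The stack of argument closures it maintains is the structure that would be extended across nodes in a distributed setting.

```python
def var(index):
    return ("var", index)


def lam(body):
    return ("lam", body)


def app(function, argument):
    return ("app", function, argument)


def krivine(term, max_steps=10_000):
    """Run the machine to weak head normal form; return the final closure."""
    env, stack = (), ()  # env and stack hold closures: (term, env) pairs
    for _ in range(max_steps):
        tag = term[0]
        if tag == "app":
            # Push the argument unevaluated, together with its environment.
            stack = ((term[2], env),) + stack
            term = term[1]
        elif tag == "lam":
            if not stack:
                return term, env  # nothing left to apply: done
            # Pop one argument closure and bind it to de Bruijn index 0.
            env = (stack[0],) + env
            stack = stack[1:]
            term = term[1]
        else:
            # Variable: jump to the closure it is bound to.
            term, env = env[term[1]]
    raise RuntimeError("step budget exhausted")


identity = lam(var(0))
const = lam(lam(var(1)))
self_apply = lam(app(var(0), var(0)))
omega = app(self_apply, self_apply)  # diverges if it is ever evaluated

# Call-by-name: the diverging argument is discarded without being run.
print(krivine(app(app(const, identity), omega)) == (identity, ()))
```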

Krivine nets perform much better than previous approaches (GOI and GAMC).

Future: work on runtime system -- failure detection and recovery, may need GC.

Implementation and formalization: bitbucket.org/ollef

Q: How about more complex data like arrays which are expensive to send over the network?

A: We're working on this -- introduce a notion of mobile and immobile.

Distilling Abstract Machines

Beniamino Accattoli (University of Bologna); Pablo Barenbaum (University of Buenos Aires); Damiano Mazza (Université Paris 13)

Setting: lambda-calculus. There has been a lot of research into the relationship between abstract machines and substitution. We introduce the Linear Substitution Calculus which decomposes beta-reduction. It is equipped with a notion of structural equivalence.

Origins: proof nets: a graphical language for linear logic. Multiplicative cut (symmetric monoidal closed category), exponential cut (for every A, !A is a commutative comonoid)

Introduce Linear Substitution Calculus -- proof nets in disguise. Have multiplicative and exponential step.

Then define reduction strategies on it: by name / by value (LR / RL) / by need require a specific form of evaluation context together with specific versions of the multiplicative and the exponential step.

Distilleries: choice of deterministic multiplicative and exponential evaluation rule and an equivalence relation which is a strong bisimulation; an abstract machine with transitions defined by the chosen rules; a decoding function. Moral: the LSC is exactly the abstract machine "forgetting" the commutative transitions. In all cases, distillation preserves complexity.

Q: What does the fourth possible combination of multiplicative and exponential evaluation correspond to (both by value)?

A: That would be a sort of call-by-value, depending on how you define the context.

Q: Can you bring some order to the seemingly unrelated evaluation contexts?

A: With these contexts, you tell the machine where to look for the next redex. You can even do non-deterministic machines. At some point, your choice is limited if you want execution to be deterministic.

Q: Are these evaluation contexts maximally liberal while staying deterministic?

A: Yes.

Q: From reduction semantics to an abstract machine is a mechanical process. Is it possible to find an algorithm that generates distilleries?

A: I can do it by hand ... don't know if there is an algorithm.

Student Research Competition Award Presentation

14 submissions; 13 accepted (7 grad, 6 undergrad); 3 winners for each category.

ICFP 2015 Advert & Closing