Liveblogging OCaml Workshop 2013

I'm here bright and early at the OCaml 2013 workshop at ICFP with Heidi Howard, Leo White, Jeremy Yallop and David Sheets! Â There's a packed session today with lots of us presenting, so we'll swap over editing this post as the day progresses. Â I also need to pop out to attend a panel on the future of Haskell so I'll be missing the bindings session, sadly!

I'm here bright and early at the OCaml 2013 workshop at ICFP with Heidi Howard, Leo White, Jeremy Yallop and David Sheets! Â There's a packed session today with lots of us presenting, so we'll swap over editing this post as the day progresses. Â I also need to pop out to attend a panel on the future of Haskell so I'll be missing the bindings session, sadly!

Accessing and using weather data in OCaml

Hez Carty from MDA Information Systems on doing large-scale weather data processing.

He started with OCaml in graduate school and was looking for something better than Python, whereas they are building systems that need to run for many years with high stability. Â He's not from a formal CS background but from the physical sciences, and found that the static typing made it easy to explore the language features. Â The native code output is also important, and he finds predictability very important in day-to-day engineering. Â The simple FFI also made a big difference.

They do weather data retrieval and use HTTP/zeromq as a message bus to do language interop, and a workflow management tool to handle dependency graphs. Â They take weather models frmo all these sources, and do various analyses (principal component analysis, probability models for precipitation via Monte Carlo simulations with a first order Markov chains). Â More generally, there is a bunch of processing for climatology and data extraction and insertion.

A NAM forecast plots the geo data of the USA, and overlays semitransparent layers (e.g. clouds) that are stacked up to get a fake satellite view of the earth, and then there are simulated radar returns where the model (e.g.) rains. Â In addition to the structured formats, there is raw streaming data from sensor stations that send back raw binary data such as precipitation events, which is written as a small OCaml library to parses them into more structured formats.

The big data formats are HDF4 and GRIB, which are binary data formats. There are mature C libraries to parse them and lots of existing analysis tools (e.g. wgrib which can extract lat/longs from a big file). Â However, the tools arent great for doing larger scale analysis, as shelling out all the time for thousands of points is not ideal -- therefore they bind to OCaml to do the analysis in-house.

The GRIB bindings use int, float and float arrays and bindings are entirely hand-written <i>(I'm looking forward to the ctypes talk! --anil)</i>. Â They expose only an OCaml friendly interface that is safe for callers. Â The speaker now shows several nice graphs from CloudSat tracks from earth orbit, and how they take intersecting profiles to deduce things about what multiple satellites are seeing.

They're mixing lots of different tools and satellite sources -- derived information from teleconnections (air surface pressure and how it varies in terms of oscillations between the north and south Atlantic). Â All the analysis is written in OCaml, and he had to work with several scientists who didn't have access to their initial source code in many cases, so the experience of working with his fresh system was very positive.

Challenges:

- standard issues when interfacing with C (type mismatches between C and OCaml in particular, and the question of sharing vs copying). Â The worst failure mode is silent failure with bad data, which leads to bad science!

- "Getting used to the functional way of life" can tough, as is knowing when to balance elegance with the speed benefits of not copying hundreds of megabytes of data around can be tough.

- Development environments other than Emacs and Vim are poorly supported.

The overall project has been very successful, and the bindings for HDF4/GRIB API bindings have been used to process 1TB+/day for over 5 years now. Â It's based on Bigarray and mmap, and the FFI makes getting up and running quickly.

He finds OPAM with local repositories fantastic, and used GODI and odb/oasis-db before that.

Q: how did he do the cool plots?

A: plplot bindings on github

Q: Anil: did you move from Fortran

Q: Nate Foster: terabytes of data makes me wonder about GC?

A: going through and folding over data generates huge amount of garbage and slows things down considerably. If the project is one-off, he tweaks the GC or manually call the GC more often. Really depends on length of code.

Q: The data processed is huge, so what was the parallel computation approach?

A: The task distribution system doesn't need a lot of parallelism, as he can work on one file at a time or chunk the files manually and aggregate the results. Â He's looking forward to doing some semi-shared memory parallelism.

Q: Pierre Chambart: Is the numerical code in OCaml or C?

A: LAPACK in OCaml, but the Monte Carlo is pure OCaml and interpolation is in pure OCaml too. Â Unless speed is absolutely required, it's written in OCaml.

Frenetic Network Controller

Arjun Guha about their multiyear project to program software define networks. When he started on this, he thought that networks are just a series of tubes! The reality is very different though, as there are numerous intermediate boxes (firewalls, routers, wireless auth). The real problem is that each of these intermediate boxes runs specialized, vendor-specific software and are configured independently via some proprietary CLI. They're difficult to configure and difficult to statically reason about.

SDN helps with this by introducing standardized programmable that can deploy in-network features, and a logically centralized controller (a big beefy server) that enables reasoning about whole-network behaviour. SDN is a hot topic right now (lots of commercial startups, 200+ people at HotSDN, generally very busy).

There are several Openflow controllers out there: Java (Floodlight), Python (Pox), C++ (Nox) and Haskell (Nettle), but Arjun introduces a novel OCaml programmable switch!

Openflow works via a controller "faulting" in new packets that are routed to the controller when unknown packets show up on the wire. The source code consists of a generic Openflow packet parser (its a binary protocol), and a generic packet parser (e.g. TCP/IP) and a routing engine.

First library: ocaml-packet that deals with deserializing TCP/IP/ARP/ICMP/Ethernet/802.1Q, and only depends on cstruct by Anil et al. (shows an example of a C struct for IPv4), and the nice thing that they get network byte order for free (thanks to Pierre Chambarts ocplib-endian of course).

ocaml-openflow uses serialization for OpenFlow 1.0 and 1.3, and is based on a (cleaned up) fork of the mirage-openflow library, using ideas from Nettle about to structure the code. OpenFlow 1.3 is less complete currently but is still quite usable.

The runtime systems for OpenFlow 1.0 and 1.3 listens for TCP connections from switches, and does the OpenFlow handles and keeps connections to switches alive.

The source code uses Lwt and so exposes the monad to external users, e.g.:

type t val connect : Lwt_unix.file_descr -> t option Lwt.t

They have a tutorial for non-OCaml hackers, and found teaching OCaml, Openflow AND Lwt was too much for new users. Therefore they built a new controller called Ox that hides much of the details of Lwt threading. It still uses them internally (Lwt, ocaml-openflow) but is inspired by Ox.

Therefore they can build simple openflow tutorials using Ox, and advanced controller such as Frenetic can be built directly using the low level libraries. Good for beginners and weathered OCaml hackers alike!

The problem with the controller seeing every bit of data is that it gets flooded by packets if it doesnt insert rules into the switches itself. Every switch has a flow table that has priorities, patterns and actions, and tries to take action without bothering the controller. At start of day, the controller does see everything, but eventually flows settle down. The problem they are solving is avoiding having two copies of the program on both the controller and the switch, and figuring out how to deal with race conditions across the two. The simple, logical structure of the OCaml program gets torn apart if they try to write these two parallel logic rules by hand.

They deal with this in the Frenetic network controller. This is a DSL for programming OpenFlow networks, and it has boolean predicates, several policy composition operations and compiles to OF flow rules. It implements ideas from several papers (ICFP/POPL/SIGCOMM/PLDI) and is fully open source on GitHub. (frenetic-lang/frenetic).

They made a transition from Haskell to OCaml for a few reasons. They specified several parts of the Core compiler and runtime in Coq, and found Haskell/Coq extraction very painful (specifically, they had to abandon Coq functors, which had helped them discover key algebraic properties for their work on Kleene algebras). They found it easier for their collaborators to use OCaml than Haskell in general too.

Lwt programming is mind boggling for beginners although the syntax extension does help, and the lack of multicore is a future problem as the controller needs scale up. Overall, they find that hacks at any layer are possible now and lots of projects have spun up!

Q: multicore is a common criticism, but could you give an example of how you would actually solve this is you had shared memory vs a fork model?

A: There are several networking algorithms in the literature, and its a barrier for consumers. Nate notes that they could write one by hand but there's a time issue there and would rather have it automagically.

PHP Program analysis at Facebook

Pffff is all about deadcode removal, test coverage, checking for undefined function and use of undeclared variables, and syntactical grep rules. They also do taint analysis via abstract interpretation, type driven flow control and eventually (via Monoidics) a separation logic model (they use OCaml too).

This talk is about static analysis of a huge codebase (5m lines+ of PHP code is scary!). Since he had a lot of big monitors, he built the tools to do analysis over huge codebases. He demonstrates this on the OCaml compiler itself (camlp4 is huge! boo!!! -anil). They can also zoom in in realtime to each box and eventually see the content of the file itself!

The goal is NOT to build an editor, but to analyse and explore large code bases. It opens and editor if required any time. The idea is to transform semantic program analysis into a visual formt that's more easily digestible. Just like in Google Maps where you see the big important roads and only the details when you zoom in. He uses this tool all day on his own codebase too, and gradually realised that when you want to learn a new codebase, you want to start to learn the software architecture first, and then want to figure out the dependency graph before seeing details.

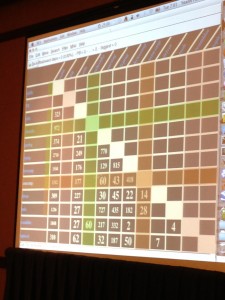

He then shows an interactive grid map that explains the dependencies of whole subdirectories of the OCaml compiler, and drill down to understand what they're for.

Audience q: did you consider a flare graph? a: others have done edge graphs and graphviz etc, and none of these scale to quite the heights that this particular grid done. The ordering of the grid is also the transitive order of dependencies, so it starts with the list of simple dependencies (stdlib) and ends with the toplevel (depends on everything). You can spot for example the OCaml dev rule that things in stdlib are only used by the compiler.

With good software, the top right of the grid is full, which is forbidden by OCaml (no cyclic module deps). With other software, the situation is far worse (audience laughter).

There are many other tools (CodeQuery, stags, sgrep/spatch and scheck). Â There are plugins for PHP, OCaml (using the cmt options) and Java (using Joust), C/C++ (using clang) getting all the semantic information from varied source code. Â There is a plan to move it to the web (from a GTK interface) by using Ocsigen.

Conclusion: CodeMap is a scalable semantic source code vis/searcher for huge codebases, and codeGraph visualizes dependencies. Â Future work is to minimize backward deps.

All on Github at github.com/facebook/pfff.git

Q: can you insert other metadata such as Linux perf output?

A: yes! (I think)

Extension points: an alternative to camlp4

Leo White talking about alternatives to camlp4. Why? Camlp4 is very complex and it comes due to its support for an extensible grammar. Did a wg-camlp4 working group to figure out how to evolve common uses towards a more easily parsed standard, to reduce the day-to-day dependency on camlp4. After 400 emails, a consensum emerged.

sexplib as an example: the old version was

type t = {

float: int default(42);

bar: float;

} with sexp

The annotated version is:

type t = {

foo: int; [@default 42]

bar: float

} [@@sexp]

This has annotation data that is passed straight through to a transformer, with no extensions to the grammar. Thus the sexplib transformer can understand with @default 42 means, and editors can easily hide or fold annotations without having to understand their details precisely.

The compiler ignores any AST extension fragments that remain after all the transformers are done.

sedlex

[%lexer

match foo with

(Range ('a','z') | Range(1000,1500)), 65 -> Foo

| Star (Range('a','z')) -> Bar

]

These are genuine AST nodes and so if these aren't handled by the transformer, an error will result. Another example is

properly foreign syntax, via COW (Caml on the Web)

let world = "world" in

let html = [%html {|<h1>Hello $str:world$!</h1>

|}]

There's also a shortcut convention for the syntax, such as:

match%lexer foo with

{|

There's a shorthand form to avoid have lots of brackets due to deeply nested lets.

[%lwt let x = f () in [%lwt let y = g () in

These have all been implemented by Alain Frisch and merged onto trunk (in OCaml 4.02.0dev). Thoughts and feedback are very welcome on the wg-camlp4 list

Q: how does this affect the quality of error messages?

A: Leo -- do you mean error messages from the extension itself?

Q: what if there is an error in the extension?

A: The 42 that was in the default has an AST fragment and so the transformer will transplant the location. A sensible transformer will therefore be nonlossy.

Q: can you chain transforms?

A: yes, thats why we are going with an AST fragment rather than a string, so chaining is easier. If youve got some macro expansion transformer and you want to use it with the sedlex example, they are executed in the other they are given on the command line. The next transformer will just see the resulting AST fragment, and therefore should compose between than camlp4 extensions have in the past.

Q: do you have ideas about hygiene as Scheme does?

A: Not particularly in this case, although Leo has some ideas for future systems.

Q: Ashish Agarwal -- do existing extensions need to be reimplemented

A: Good thing about all these existing extensions is that they have an existing parser, so you can take your existing implementation in camlp4 and mechanically translate into an extension_points version!

Q: Yaron: is anyone thinking about good libraries (hygiene etc)

A: Alain Frisch has merged in ppxtools into OPAM stable (that depends on 4.02.0+)

Q: Will this improve performance over camlp4

A: Parsing is an expensive process and this is still doing marshalling. The cost is often the case that we are shelling out to this other process, so we still need to look into improving performance in other ways.

GPGPU programming with SPOC

M Bourgoin presents his PhD work on SPOC.

GPGPU is odd hardware: several mutiprocessors, dedicated memory, connected via PCI-E and transferred between a GPU and host using DMA. Currently hardware has 300-2000 cores vs 4-16 CPU cores (wow). However this demands a very specific progamming model to take advantage of all this hardware.

Shows an example of a vector addition in openCL

__kernel vac_add(global const double *c, global const double *a, global double *b, int N) int nIndex = get_global_id(0); if (nIndex >= N) return; c[nIndex] = a[nIndex] + b[nIndex]

To actually use this though, you need a massive program to set up the kernel and execute it. It does the transforms, initialization, scheduling and so forth.

OCaml and GPGPU complement each other, since we have sequential high-level OCaml and highly parallel and low-level GPGPU. So the idea with SPOC is to use OCaml to develop high level abstractions in the GPGPU and program it from OCaml.

SPOC is a stream processing engine in OCaml that emits Cuda but lets it be more hardware independent.

let dev = Devices.init ()

let n = 100000

let v1 = Vector.create Vector.float64 in

let v2 = Vector.create Vector.float64 in

let v3 = Vector.create Vector.float64 in

let k = vector_add (v1,v2,v3,n)

let block = {blockX = 1024; blockY=1; blockZ=1}

let grid={gridx=(n+1024-1)/1024; gridY=1; gridZ=1}

let main()=

random_fill v1;

random_fill v2;

Kernel.run k (block,grid) dev.(0);

#printf of result

SPOC takes care of transferring the kernel to the GPU and back and handling the scheduling and exchange of data. Vectors are very similar to Bigarrays in that they are external memory.

We want: simple to express, predictable performance and extensible libraries, and for current high performance libraries to be usable more safely.

Two solutions: interop with Cuda/OpenCL kernel (better perf and more compatible but less safe). There is also a DSL in OCaml called Sarek, which talk focusses on.

Sarek vector addition has an ML like syntax, and the language features monomorphic, imperative, toplevel and statically typed checked (with type inference). Its statically compiled to OCaml code and dynamic compilation to Cuda and OpenCL.

SPOC+Sarek achieves 80% of hand-tuned Fortran performance and SPOC+external kernels is on par with Fortran (93%). It's type safe, 30% code reduction, memory managed by the GC and there are fewer memory transfers. This is ready for the real world and has great performance! Available on OPAM.

Great performance, portable, great for GPU and for multicore CPU and nice playground for future abstractions.

Who can benefit?

OCaml programmers -> better performance

HPC programmers -> much more flexible and extensible than current state of the art.

Future work: Sarek needs custom types, function decls, recursions, exceptions, and to build parallel skeletons using SPOC and Sarek.

Why not use Sarek for multicore? Well, here's the results!

- ParMap: data parallel and similar to OCaml mapfold

- OC4MC: posix threads, compatible with current OCaml code

- SPOC : GPGPU kernels on a CPU, mainly data parallel and needs OpenCL.

Simple program to calculate power series and matrix multiplication.

Power: OCaml 11s14, Parmap 3s30 SPOC <1s

Matmul: OCaml 85s OC4MC 28s and SPOC 6.2s

Significant improvements using SPOC -- 11x for power series and 12x for SPOC. Running on a quad core intel i7.

SPOC is available via OPAM and compatible with x86_64 on Linux and MacOS X, and speaker would love feedback.

Q: Anil - how hard is OpenCL to install

A: easy if you know your hardware model and to the vendor website. Harder with multiple GPUs though.

A: if you want to target CPUs, you need to target OpenCL and not CUDA.

High level performance optimisations for OCaml

Pierre Chambart at OCamlPro (funded by Jane Street). OCaml is fast, but not an optimizing compiler (it has predictable performance, good generated code, but what can we do to make it go faster?).

A small modification to high level code shouldnt influence the low level bits.

let f x = let cmp = x > 3 in if cmp then A else B let g x = if x > 3 then A else B

The second bit of code is faster than the first here since 'g' is spotted by the compiler peephole optimizer.

let g x = let f v = x + v in f 3

Abstract code should be compiled less abstractly. Above, we want to inline f, but a closure is still allocated per call unnecessarily. But we also dont want the compiler to be too smart and lose predictability!

Currently compiler pipeline has a parsetree - typed tree - lambda (untyped) - clambda (lambda+closures) - cmm (simple C like) and then mach (instruction graph similar to llvm) and then lin (assembly like).

Details: typed tree to lambda (construct elimination) - lambda (high level simplication such as reference elimination and pattern matching generation) - lambda to clambda (inlining, constrant propagation and book keeping in an intricate recursive loop) - clambda to cmm (unboxing, lots of peep hole checks for boxing/unboxing and tagging/untagging) - cmm to mach (instruction selection and some more peephole) - mach (allocation fusion, register allocation and scheduling)

Where do we do high level optimizations then? For example, where do we do inlining (since it allows other transformations to apply). typedtree is too complicate, lambda (want inlining, simpler with closures), clambda (is too difficult to improve, and Pierre did try) and cmm (good for local optimization but not global) and mach is architecture specific.

They are adding a new intermediate form between lambda and clambda known as flambda. This needs a highlevel view of values, simple manipulations, explicit closures and explicit value dependencies. lambda to flambda introduces closures, and flambda to clambda is mainly book keeping (and preparing cross-module information). The magic for optimization will be in flambda-flambda passes, and not in the flambda to clambda layers.

Shows several examples of inlining, simplication and dead code elimination and why its significantly easier in the flambda form for simple microexamples. Also shows lambda lifting!

Change the performance model. Now its WYSIWYG, but we want some sort of understandable compile-time evaluation. For example, consider a map function as a metaprogramming function that you want to execute at compile time without all the intermediate closure allocation.

Add build_test to your OPAM to make it easier to test the compiler, and Obj-using code is very likely to break if you do certain mutations behind the compiler, or dead code elimination might happen if you use a value behind the compiler's back.

Q: SimonPJ: why is it easier to do closure conversion first, as it's a lot easier in GHC to do the opposite. There are now two ways to get a value in scope doing it the other way.

A: In Haskell you have everything in your closure, since you get the information from the closure.

Q: Think of lambda calculus with let

A: We do flow analysis to get around this!

Q: This is quite complex. Is it whole program analysis?

A: Restricted to a single compilation unit, and a few other cases, not cross-module yet.

Q: Yoann: if the prediction is "its always faster" then why do you care about predictability?

Replacing the format string hack with GADTs

Benoit Vaugon talks about how to improve the typing of Format strings in OCaml by using GADT encodings. He stats with showing basic examples of the Printf module, and advanced examples that include the "%a" callback, date scanning and hex dumping.

The OCaml type checker has an inferred type that encodes the details of the format string in a "format6" type that has lots of type parameters that describe the format string contents.

During type checking, the literal string is parsed and there is manual inference of the format6 type parameters. At runtime, the format strings are represented by the string from the source code.

During printing functions, it parses the format and count parameters and accumulates parameters. It then extracts and patches subformats, and finally calls the C printf function. Scanning is more complex but broadly similar.

There are quite a few problems with this. There are 5 (!) format string parsers (two in Printf, two in Scanf, one in the type checker) and there are lots of little incompatibilities as a result. For example

Printf.printf "%1.1s" "hello"

Results in Invalid_argument exception despite the static type checking, with a useless error message.

There is a weakness in the type checker too, for example

Printf.sprintf "%s.+f" 3.14

results in the format string being printed.

You can also segfault from there, such as

Format.printf "@%d%s" 42 "hello"

will segfault.

Speed is also a concern, as parsing the format at runtime is slow, and reparsing is required by another slow C printing function. Lots of memory allocation is required.

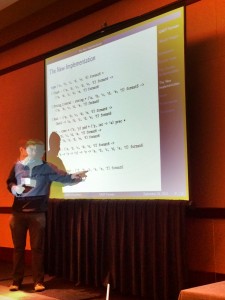

The new implementation sorts all this out by using GADTs to represent the formats. The format6 type is now concrete and not magic predefined. Formats now also become statically allowed and not multiply passed around dynamically.

The new implementation (which he shows) is a fairly epic GADT but quite regular when broken down into pattern clauses and examined with respect to the old implementation.

Issues: evaluation order changes slightly, as for printing functions the parameters are accumulated before printing. String_of_format is implemented by "%identity" and in the new implementation, we need to change the representation (either regenerate the string from the GADT or implement formats by a tuple).

There's now only one format parser (down from 4-5) for the standard library and OCaml type checker

type ('b,'c,'e,'f) fmt_ebb = Fmt_EBB:

('a,'b,'c,'d,'e,'f) CamlinternalFormatBasics.fmt ->

('b,'c,'e,'f) fmt_ebb

val fmt_ebb_of_string : string -> ('b,'c,'e,'f) fmt_ebb

val type_format: ('x,'b,'c,'t,'u,'v) format6 ->

('a,'b,'c,'d,'e,'f) fmtty -> ('a,'b,'c,'d,'e,'f) format6

(this looks verbose but isnt really exposed outside of the compiler.

Another issue is the "%(..%r..%)" construction, since we need to include a proof term of the number of "%r" occurrences. The encoding for this is quite complex.

Performance of the new system is significantly better across the board. A hello world goes from (230ns to 55ns) timing and (732 to 24 bytes allocated) for example.

Q: Oleg did it already in Haskell (ha ha -- editor). He notes that six parameters arent needed, as the GADT is the interpreter over the paramters.

A: yes can remove them if we split the Printf and Scanf format, and there is also a format4 which removes several parameters. We also have a problem with error messages since all the type parameters make things really complicated.

Q: Oleg: the work of interpreting the stream is pushed into the GADT, but if you write an interpreter over the GADT then we have simpler external types.

...discussion moves into offline discussion.

Dynamic types in OCaml

Gregoire Henry wants to do dynamic types in OCaml.

- Structural type introspection: generic I/O primitives, type-safe (unlike Marshal) and typed (not written in Camlp4) -- these are polytypic functions.

- Normal type introspection, for dynamic values (a k/v store or a DSL with dynamic typing), or extensible polytypic functions for abstract types.

- This would also give us a common type representation for (e.g.) Eliom services that will be converted to Javascript, or for FFI libraries, and to let the debugger explore the heap with exact typing information.

Probably isnt a single representation that fits all these use cases: We need to preserve abstraction when desired, but also to break abstraction when desired (e.g. by the debugger or a generic printer, but not by accident!), and it shouldn't impose a big performance hit.

Gregoire gives a description of a polytypic printing function

let rec print (type t) (ty: t ty) (v:t) = match head ty with |Int -> print_int v | String -> print_string v | List ty -> print_list (print ty) v | Sum desc -> let (name, args) = sum_get desc v in print_string name ...

We define a predefined type "t ty" and a syntax for type expressions "(type val t) of type "t ty". We then introduce implicit type arguments which are optional arguments instantiated at call-site with the dynamic representation of the expected type.

val print : ?t:(typ val 'a) -> 'a -> string let print ?(type val t) (v:t) = ... type t = R of int * int | ... let x = R (1,2) let () = print x (* implicit arg is (type val t) *)

This is experimental syntax, but is shows how we can keep the representation abstract but the printer can still operate over it since the implicit argument passes the printer around.

The main problem is now how we mix polytypic function and abstraction, without always printing "abstract" instead of the real underlying structure. One solution is an extensible polytypic function.

module M: sig type t val x: t end = struct type t = R of int * int let x = R(22,7) let () = register_printer (external type val t) (fun x -> ... ) end let () = print x

We register a printer internally in the module, without exposing its internal type externally, but still let it be printed. The internal and external types are different though. We do need to figure out a canonical name for types defined outside the current compilation unit (its absolute path).

By default though, abstraction should consistently introduce new nominal types, but we're not sure how to reference all the of the external names of a given type within its initial compilation unit (open problem). A pragmatic approach to solving this is the manual or semi-automatic creation of runtime "type names".

In conclusion, they have a GADT for structural introspection and a RTT representation with global names. Â There is also a type-constructor index association table. Â Implicit type arguments (lightweight syntax for calling polytypic function, and explicit type parameters for polymorphic functions).

Q: what's the gain of putting this into the compiler?

A: you may miss language corners, such as firstclass modules or functors, as your "userspace" OCaml code has to pass a type witness into a functor or firstclass module. Â Compiler support makes this passing much easier. Â The other big benefit is clear error messages from this compiler integration in the long term. Â It's not currently easy for beginners, and this is very important to get right if this becomes a supported feature.

On variation, injectivity and abstraction

Jacques Garrigue speaks about an interesting bug in the type checker that needs some language changes. There was PR#5985 which was a serious type checker bug that lost injectivity.

module F(S: sig type 'a s end) = struct include S type _ t = T : 'a -> 'a s t end module M = F (struct type 'a s = int end) let M.T x = M.T 3 in x - : 'a = <poly> the type is lost!

After expanding "s", the definition of "M.t" is actually "type _t = T : 'a -> int t" but "'a" is not marked as an existential.

In order to protect against this unsoundness, all variables in type declarations must be bound, either by appearing inside the type parameters or be existentially bound (i.e. only present in GADTs). Inside type parameters, these variables must be injective.

OCaml doesnt do injectivity directly, since it relies of variance inference (since we have subtyping). The variance of a parameter is either explicit for abstract and private types or constrained parameters, or its inferred from its occurrences otherwise.

Constrained type parameters have been present since the very start.

type 'a t = T of 'b constraint 'a = 'b list

Rules for checking variance in this case become much more complicated, since constrained parameters variance must now be explicit The variance of type variables inside constrained parameters must also be weaker or equal the version inside the body of the definition.

type +'a t = T of 'b constraint 'a = 'b list (* 'b covariant *) type 'a r = 'a -> int (* contravariant *) type +'a t = T of 'b constraint 'a = 'b r (* Fails *) (* 'b is contravariant in parameters but covariant inside *)

In OCaml though, the variance of a parameter is allowed to be weakened through abstraction.

module M : sig type +'a u end = struct type 'a u = int end

This is correct for the types, but becomes incorrect when used for type parameters.

module F(X: sig type 'a r end) = struct type +'a t = T ob 'b constraint 'a = 'b X.r end module N = F (struct type 'a r = 'a -> int end)

Even when we assume that r is invariant, 'b is inferred as invariant from the parameter of t, which overrides the covariance of the body. Meanwhile in the module N, the variance is now wrong.

How do we fix this? We need to refine the definition of variance, as traditional variance subumption defines a lower bound on the variance, but we need to add some upper bound information too, to be sure that parameters cannot have a strong variance.

(technical explanation of how to compose variances omitted here)

In OCaml 4.01, full variance inference is done using 7 flags (the last is a special case required for principality). Some uses will no longer type check so programs may be rejected for safety.

In the future, Jeremy Yallop, Leo White and Jacques would like to add injectivity annotations for abstract types.

type #'a s type _ t = T : 'a -> 'a s t

Add new types for isomorphic abbreviations, as Haskell does

module M : sig type #'a t val f : int -> ['pos] t end = struct type 'a t = new int let f x = (abs x : int :> 'a t) end

This is similar to private, but subtyping will work in both directions -- this is useful for efficiency, encoding runtime types and allows coercions to be delayed until the signature rather than in the both (in the example above, the coercion in "f" could be omitted) due to the signature.

Another problem is that one cannot prove the uniqueness of abstract types, and we dont know whether an abstract type is contractive (so even if you use rectypes, the following functor can never be defined)

module Fixpoint (M: sig type 'a t end) = struct type fix = fix M.t end Error: the type abbreviation fix is cyclic

One also never knows whether an abstract type may be a float, so the float array optimization may be mismatched.

OCamlot: lots of OCaml testing!

(will post David's slides on the OCaml Labs website since it's one of our talks. Will edit this post when that happens).

Merlin: editor support for OCaml

Merlin is a sophisticated editor assistant for OCaml that provides autocompletion in vim and emacs. They leap straight into an interactive demo that's awesome: autocompletion, module introspection when writing a functor, and so on.

Unlike other tools, this doesn't rely on a CMT file, so code doesn't need to compile (except for source browsing). Your project can have type errors when using Merlin!

It integrates with the ecosystem and has direct support for Findlib. There is no direct support for camlp4 however, with specific support for some extensions. If you want a new shiny extension then talk directly to them

There is build system support for running either omake or Jenga in polling mode (for iterative recompilation). Omake rules can inform Merlin to get a consistent state of the project!

A typical session: Parsing is done in two stages and the OCaml parser has been modified to be much more resilient to errors. The usual typing rules are applied, but passed onto a more "relaxed" typers in case of errors to give some support for incremental parsing.

Coming soon is documentation (ha!) as well as retrieval for ocamldoc comments to further augment hints, as well as even more error recovery heuristics. Some of the patches will also be submitted upstream to improve error handling (by accumulating them instead of printing them directly).

It's available on OPAM under an MIT license via "opam install merlin" and thanks to Jane Street for letting them work on it during an internship there, and all the bug reporters!

Q: Have you considered running Merlin on a remote system and speak the same JSON protocol?

A: They haven't, but it really shouldn't be a problem.

Q: Julien V: do we have to build first?

A: No you dont have to build first

Q: What happens when you change branches in a project

A: For project-wide information you need to compile and rebuild.

Q: in the second pass of type checking, what does "relaxed" mean

A: When typing fails for some branch of the AST, in the simple case we introduce a fresh type variable and attempt to do unification with the result of this. This will never be sound, but lets it make progress and give SOME feedback to the user.

Q: is this a new type checker?

A: No its the original type checker with augmented error handling paths to do more unification.

Q: is the relaxed checking really worthwhile? It wasnt a lot of use in gcc since you get a lot of followon errors that are meaningless.

A: lots of discussion, opinions split, it can be disabled!

Understanding memory behaviour of programs

Cagdas B doing a PhD on memory profiling. He wants to study the behaviour of OCaml programs and work on decreasing memory footprint and generally reduce GC pressure on real OCaml programs.

Shows Why3 graph (see photograph) and they're running nice finegrained analysis over it on real world analyses. How do they generate these graphs?

$ opam switch 4.00.1+ocp-memprof $ opam install why3 $ OCAMLRUNPARAM=m why3replayer.opt -C why3.conf p9_16

Patched compiler and then the OCAMLRUNPARAM will generate a lot of sampled snapshots of the OCaml heap. There's no need to change the code or the compilation options. Then install analysis tools

$ opam install ocp-memprof $ ocp-memprof -loc -sizes PID

The final step analyses all the snapshots. But what is a snapshot? It's a compressed version of the OCaml heap, with location ids, a graph with pointers, and has saved globals (toplevel modules). We obtain the snapshots by computing a linear scan of all the heap values.

The OCAMLRUNPARAM=m forces the program to generate a snapshot after every major GC, or request the program to generate a snapshot via a HUP signal, or via a new "dump_heap" function that's been added to the GC module.

It works by reserving some space inside the standard OCaml value block, and so there is minimal runtime performance impact except when dumping out snapshots after a GC. There's no space overhead since the header size isnt changed. However, it's only available on 64-bit platforms and reduces block size to 64GB. Ran experiment with OPAM and location identifiers are limited.

There's one tool based on the snapshot identifiers and the "memprof" tool gets all identifiers from the heap and the corresponding location, and then looks up the right types from a specific location using cmt files (the typed AST).

Plan for this work is to improve the current framework (aggregate information by type and location) and to recover more types, and build more tools around it!

Q: Roshan: one common usecase is when grabbing locations, its boring to see Array.create and it would be nice to see a backtrace of the execution to see where the allocation happened.

A: this is a problem indeed (discussion about the ongoing Mark Shinwell patches too, and previous talk about backtrace analysis from last years workshop).

Benchmarking in Core

Roshan James about microbenchmarking in JS Core. Precise measurementis essential for performance critical code. We want to measure the execution cost of functions that are relatively cheap. Functions with a short execution time that run millions of times in a tight loop.

The essence of benchmarking is to run a time loop (like Time.now) a lot of times. We need to figure out how to compute a batch size to account for the time it takes to run the actual timing measurement. Similar to Criterion for Haskell, where they loop Time.now to figure out how long it takes to compute the time itself, and then run the benchmark function, and compute a full estimate. This works ok, but it requires density plots to let you visualise the form of the error (bimodal, or something else, for example).

There is a lot of noise in microbenchmarking, such as delayed costs due to GC, and variance in execution times is affected by batch sizes. So if we vary the batch size, the variance changes quite a bit since the GC is running in different intervals during the batches. The GC noise has a periodicity though!

They treat microbenchmarking as a linear regression, and then fit execution time to a batch size. They vary the batch size geometrically to get a better linear fit (shows a very linear graph). Theres now no need to estimate the clock and other constant errors, since they are accounted for by the y-intercept which shows the constant costs.

Other costs can also be predicted in the same way, such as estimate memory allocations and promotions using batch size too. This also allows measuring a goodness of fit by using R2 and bootstrapping techniques.

Example use of Core_bech is really easy:just

open Core.Std open Core_bench.Std let t1 = Bench.Test.create ~name:"id" (fun () -> ())

Is the identity run for example, and just put something more complex in there. Output is an Ascii_table with lots of columns showing interesting results.

Some functions have a strange execution time, and the observation isnt a great fit. Between runs, they also directly query the GC and directly query how many times some things have happened (minor collections and so on), and include these along with the batch size in the predictor. With multiple predictors, they are really close to the observed results indeed.

(lots of interesting maths about how they perform runtime cost decomposition)

Its available via "opam install core_bench" and they want to expose more predictors by measuring the effect of live words on performance, and counters for major collection work per minor gc. Accuracy of results is interesting as least-squares is susceptible to outliers so they can incorporate the knowledge that the measurement is heavy-tailed.

September 25th, 2013 - 08:12

Thanks again for sharing ! I’m impressed that you managed to write down that amount of detailed information during the talks.

Anyone pressured the Facebook guys to make an OPAM package of pfff yet ? They are the only ones not to mention it, hehe (except for some of the compiler stuff)

September 26th, 2013 - 13:12

Thanks Anil for summarizing this event so quickly!

September 26th, 2013 - 16:52

Yes, I asked them, but it sounds like they are relying on many small libraries, they would have to package before (I wouldn’t care about putting everything in one big package, but Thomas doesn’t like that idea)